At Punchporn, nothing is more important than the safety of our community. Upholding our Core Values of consent, freedom of sexual expression, authenticity, originality, and diversity is possible only through continual efforts to ensure the safety of our users and our platform. Therefore, we have always been committed to preventing and eliminating illegal content, including non-consensual material and child sexual abuse material (CSAM). Every online platform has the moral responsibility to join this fight, and it requires collective action and constant vigilance.

Over the years, we have put in place robust measures to protect our platform from abuse. We are constantly improving our Trust and Safety policies to better identify, flag, review, remove, and report illegal or abusive material. We are committed to doing all that we can to ensure a safe online experience for our users, which requires a concerted effort to innovate and to always strive to do more.

As part of our efforts and dedication to Trust and Safety, we feel it is important to provide information about our Trust and Safety initiatives, technologies, moderation practices and how our platform responds to harmful content. This Transparency Report provides these insights and builds upon our inaugural report, published in 2021.

We are committed to being as transparent as possible and to being a leader in eradicating abusive content from the internet. We will continue to do our part to ensure Punchporn is free from any illegal content.

Trust and Safety Initiatives and Partnerships – 2021 Update

Our goal of achieving and setting the highest level of Trust and Safety standards relies heavily not only on our internal policies and moderation team, but also on our array of external partnerships and technology. The organizations we partner with are part of the global effort to reduce illegal material online and help ensure our platform and adult users can feel safe.

In 2021, we continued to further our Trust and Safety efforts and are proud to have added new partnerships and initiatives. Below is a non-exhaustive list of partnerships and accomplishments achieved in 2021:

Thorn

In November 2020, we became the first adult content platform to partner with Thorn, allowing us to begin using its Safer product on Punchporn and adding another layer of protection to our robust compliance and content moderation process. Safer joins other technologies such as CSAI Match, Content Safety API, PhotoDNA, and Vobile as protective measures that Punchporn utilizes to help protect visitors from unwanted or illegal material.

Deterrence Messaging for Non-Consensual Intimate Imagery

Since the launch of our CSAM deterrence messaging, we have embarked on a similar initiative for non-consensual material. This concerns searches for content where there is a lack of consent to sexual acts, the recording or distribution of the content, or manipulation of one’s image, commonly known as “deepfakes”. If users search for terms relating to non-consensual material, they are reminded that such material may be illegal. As part of the messaging, resources are provided for removal of non-consensual material and support for victims via our NGO partners.

ActiveFence

To expand on our efforts in identifying and responding to safety risks on Punchporn, we partnered with ActiveFence, a leading technology solution for Trust and Safety teams working to protect their online integrity and keep users safe. ActiveFence helps Punchporn keep our platform free of abuse by identifying bad actors, content that violates our platform’s policies, and mentions of the use of Punchporn for illegal or suspicious activity.

SpectrumAI

We have been working with Spectrum Labs AI to proactively surface and remove harmful text on our platforms. This drives productivity and accuracy of our internal moderation teams and tooling.

Additional Partnerships, Tools, and Initiatives

Trust and Safety Center

In the spring of 2020, we launched our Trust and Safety Center as part of our ongoing efforts to ensure the safety of our users and to clarify our policies. Our Trust and Safety Center outlines our Core Values and the kind of behavior and content that is—and is not—allowed on the platform. It also highlights important points of our Terms of Service by providing some Related Guidelines, and it shares some of our existing policies with respect to how we tackle violations through prevention, identification, and response.

Trusted Flagger Program

Launched in 2020, our Trusted Flagger Program consists of 44 members in the non-profit sector spanning more than 30 countries, including the Internet Watch Foundation (UK), the Cyber Civil Rights Initiative (USA), End Child Prostitution and Trafficking (Sweden/Taiwan), and Point de Contact (France). This program allows hotlines, helplines, government agencies, and other trusted organizations to disable content automatically and instantly from Punchporn, without awaiting our internal review. It is a significant step forward in identifying, removing, and reporting harmful and illegal content.

Lucy Faithfull Foundation & Deterrence Messaging

The Lucy Faithfull Foundation is a widely respected non-profit organization at the forefront of the fight against child sex abuse, whose crucial efforts include a campaign designed to deter searches seeking or associated with underage content. We have actively worked with Lucy Faithfull to develop deterrence messaging for terms relating to child sexual abuse material (CSAM). As a result, attempts to search for certain words and terms now yield a clear deterrence message about the illegality of underage content, while offering a free, confidential, and anonymous support resource for those interested in seeking help for controlling their urges to engage in illegal behavior.

Yoti – Third-Party Identity Documentation Validation

Yoti is our trusted, third-party identity verification and documentation validation service provider. Yoti verifies the identity of users who apply to become verified uploaders before they can upload any content to our platform.

Trusted by governments and regulators around the world, as well as a wide range of commercial industries, Yoti deploys a combination of state-of-the-art AI technology, liveness anti-spoofing, and document authenticity checks to thoroughly verify the age and identity of any user. Yoti technology can handle millions of scans per day with over 240 million age scans performed in 2019. Yoti has received certification from the British Board of Film Classification’s age verification program and from the Age Check Certification Scheme, a United Kingdom Accreditation Service.

Users who verify their age with Yoti can trust that their personal data remains secure. Yoti is certified to meet the requirements of ISO/IEC 27001, which is the global gold standard for information security management. This means that Yoti’s security aligns with Punchporn’s commitment to protecting our users’ privacy as well. You can learn more about how Yoti works here.

Corporate Responsibility

Punchporn Cares is a philanthropic endeavor focused on furthering the charitable contributions of Punchporn as a whole. Starting with our “Save the Boobs” campaign in October 2012 aimed at raising awareness and funds for breast cancer, Punchporn has donated millions of dollars and raised awareness for important causes such as Black Lives Matter, Coronavirus relief efforts, intimate partner violence, animal welfare and the environment through philanthropic campaigns that engage our users. Over the years we have worked with and donated to well-recognized and respected organizations such as People for the Ethical Treatment of Animals (PETA), the National Association for the Advancement of Colored People (NAACP), the Center for Honeybee Research, Movember, and more, leveraging our 130 million daily visitors to increase awareness and donate funds to some very important causes.

The Punchporn Sexual Wellness Center (SWC) was launched in 2017 under the direction of esteemed sex therapist Dr. Laurie Betito as an online resource aiming to provide our audience with information and advice regarding sexuality, sexual health, and relationships. The SWC furthers Punchporn’s commitment to conveying unequivocally that pornography should NOT be a replacement for proper sex education—and that sex in porn is very different from sex in real life! The SWC features original editorial and video content on an assortment of topics from a diverse array of doctors, therapists, community leaders and experts.

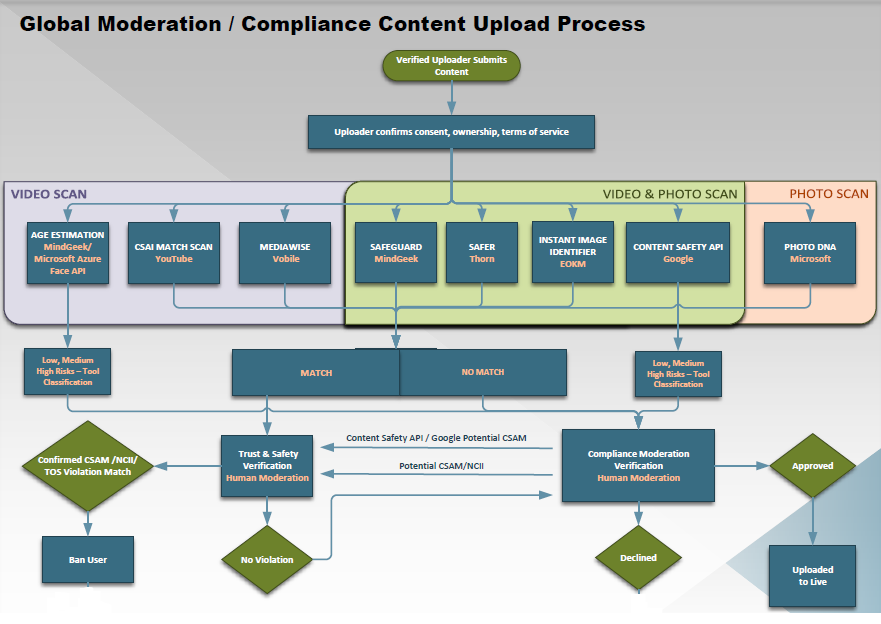

Cutting-Edge Automated Detection Technology

Punchporn deploys several different automated detection technologies to help moderate content before it can be published, including:

- CSAI Match – YouTube’s proprietary technology for combating child sexual abuse imagery. All video content appearing on Punchporn has been scanned against YouTube’s CSAI Match. All new video content is scanned at the time of upload and before it can be published.

- PhotoDNA – Microsoft’s technology that aids in finding and removing known images of child sexual abuse material. All photo content appearing on Punchporn has been scanned against Microsoft’s PhotoDNA. All new photo content is scanned at the time of upload and before it can be published.

- Google’s Content Safety API – Google’s Content Safety API uses artificial intelligence to help companies better prioritize abuse material for review. The API steps up the fight for child safety by prioritizing potentially illegal content for human review and helping reviewers find and report content faster.

- MediaWise – Vobile’s cyber “fingerprinting” software that scans all new user uploads to help prevent previously identified content from being re-uploaded.

- Safer – In November 2020, we became the first adult content platform to partner with Thorn, allowing us to begin using its Safer product on Punchporn and adding another layer of protection to our robust compliance and content moderation process. Safer joins the list of technologies that Punchporn utilizes to help protect visitors from unwanted or illegal material.

- Safeguard – Safeguard is Punchporn’s proprietary image recognition technology designed to combat both child sexual abuse imagery and non-consensual content by preventing the re-uploading of previously fingerprinted content to our platform.

- Age Estimation – We also utilize age estimation capabilities to analyze content uploaded to our platform, using a combination of internal proprietary software and Microsoft Azure Face API, in an effort to strengthen the varying methods we use to prevent the upload and publication of potential or actual CSAM.

Punchporn remains vigilant when it comes to researching, adopting, and using the latest and best available detection technology to help make it a safe platform, while remaining an inclusive, sex positive online space for adults.

Content Removals

A total of 3,666,450 pieces of content (1,965,648 videos and 1,700,802 photos) were uploaded to Punchporn in 2021. Of these uploads, 71,907 (53,919 videos, 17,988 photos) were blocked at upload or removed for violating our Terms of Service.

An additional 102,051 pieces of content (54,815 videos, 47,236 photos) uploaded prior to 2021 were labeled as Terms of Service violations, for a total of 173,958 pieces of content (108,734 videos, 65,224 photos) removed during 2021 for violations of our Terms of Service.
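The totals in the two paragraphs above can be reproduced from the stated video and photo counts. The following is a minimal sketch that uses only figures quoted in this report; the variable names are illustrative and do not correspond to any Punchporn system.

```python
# Counts quoted in the report text; variable names are illustrative only.
uploaded_2021 = {"videos": 1_965_648, "photos": 1_700_802}
removed_from_2021_uploads = {"videos": 53_919, "photos": 17_988}
removed_from_earlier_uploads = {"videos": 54_815, "photos": 47_236}

total_uploaded = sum(uploaded_2021.values())
total_removed_2021_uploads = sum(removed_from_2021_uploads.values())
total_removed_earlier = sum(removed_from_earlier_uploads.values())
total_removed = total_removed_2021_uploads + total_removed_earlier

# Share of 2021 uploads that were blocked at upload or removed.
removal_rate = total_removed_2021_uploads / total_uploaded

print(total_uploaded)          # 3666450
print(total_removed)           # 173958
print(f"{removal_rate:.2%}")   # 1.96%
```

On these figures, roughly 2 in every 100 pieces of content uploaded in 2021 were blocked or removed for a Terms of Service violation.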

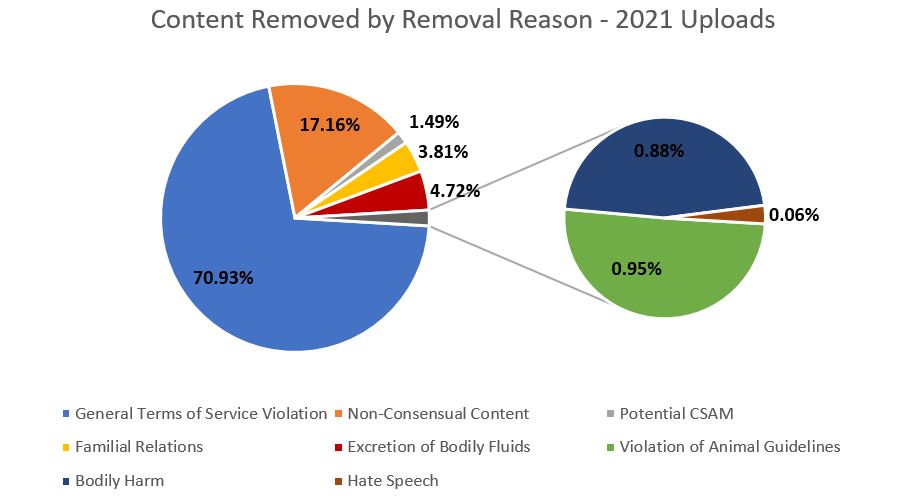

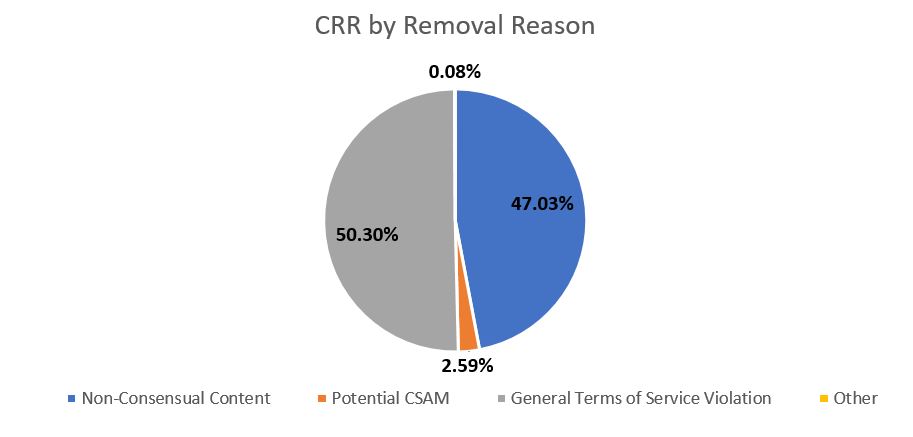

The above figure provides a breakdown of content both uploaded and removed in 2021 by removal reason. For additional information and explanations of each removal reason, please see below.

Non-Consensual Content (NCC)

During the reporting period, we removed 28,781 pieces of content (20,666 videos, 8,115 photos) for violating our NCC policy. When we identify or are made aware of the existence of non-consensual content on Punchporn, we remove the content, fingerprint it to prevent re-upload, and ban the user where appropriate.

Our NCC policy includes the following violations:

- Non-Consensual Content – Act(s): Content which depicts activity for which an individual did not validly consent. A non-consensual act is one involving the application of force directly or indirectly without the consent of the other person to a sexual act.

- Non-Consensual Content – Recording(s): The recording of sexually explicit activity without the consent of the featured person.

- Non-Consensual Content – Distribution: The distribution of sexually explicit content without the featured person’s permission.

- Non-Consensual Content – Manipulation: Also known as “deepfake,” this type of content appropriates a real person’s likeness without their consent.

Potential Child Sexual Abuse Material (CSAM)

We have a zero-tolerance policy toward any content that features a person (real or animated) who is under the age of 18. Through our use of cutting-edge CSAM detection technology, age and identity verification measures, and human moderators, we strive to quickly identify and remove CSAM and ban users who have uploaded this content. Any content that we identify or are made aware of being potential CSAM is also fingerprinted upon removal to prevent re-upload. We report all instances of potential CSAM to the National Center for Missing and Exploited Children (NCMEC).

In 2021, we made 9,029 reports to NCMEC. These reports contained 20,401 pieces of content (11,626 videos, 8,775 photos) which violated our CSAM Policy.

General Violations of Terms of Service

During the reporting period, we removed 111,111 pieces of content (64,061 videos, 47,050 photos) for general violations of our Terms of Service. When we identify or are made aware of the existence of content that violates our Terms of Service on Punchporn, we remove the content, fingerprint it to prevent re-upload, and, where appropriate, may ban the user.

Content may be removed for general violations of our Terms of Service, outside of any of the other reasons listed, for being objectionable or inappropriate for our platform. This also includes violations related to brand safety, defamation, and the use of our platform for sexual services.

Other Reasons for Removal

Hate Speech

We do not allow abusive or hateful comments or content of a racist, homophobic, transphobic, or otherwise derogatory nature intended to hurt or shame those individuals featured.

During the reporting period, we removed 77 pieces of content (50 videos, 27 photos) for violating our hate speech and violent speech policy.

Animal Guidelines

Content that shows or promotes harmful activity to animals, including the exploitation of an animal for sexual gratification and the physical or psychological abuse of animals, is prohibited on our platforms.

During the reporting period, we removed 1,149 pieces of content (983 videos, 166 photos) for violating our Animal Guidelines.

Bodily Fluids

Content depicting blood, vomit, or feces, among others, is not permitted on Punchporn.

During the reporting period, we removed 4,484 pieces of content (3,770 videos, 714 photos) for violating our policy related to excretion of bodily fluids.

Familial Relations

We do not allow any content which depicts incest.

During the reporting period, we removed 6,772 pieces of content (6,520 videos, 252 photos) for violating our policy related to familial relations.

Bodily Harm

We prohibit excessive use of force that poses a risk of serious physical harm to any person appearing in the content, as well as any physical or sexual activity with a corpse. This violation does not include forced sexual acts, as this content would fall under our non-consensual content policy.

During the reporting period, we removed 1,183 pieces of content (1,058 videos, 125 photos) for violating our bodily harm and violent content policy.
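The per-reason counts listed above can be checked against the overall total of 173,958 removals. The sketch below is a consistency check built only from numbers quoted in this report. It assumes that the 20,401 pieces of content reported to NCMEC are the potential CSAM removals for the year; the dictionary and variable names are illustrative.

```python
# (videos, photos) removed per reason, as quoted in the report.
# Assumption: content reported to NCMEC counts as the potential CSAM removals.
removals = {
    "Non-Consensual Content": (20_666, 8_115),
    "Potential CSAM": (11_626, 8_775),
    "General Terms of Service": (64_061, 47_050),
    "Hate Speech": (50, 27),
    "Animal Guidelines": (983, 166),
    "Bodily Fluids": (3_770, 714),
    "Familial Relations": (6_520, 252),
    "Bodily Harm": (1_058, 125),
}

total_videos = sum(videos for videos, _ in removals.values())
total_photos = sum(photos for _, photos in removals.values())
total = total_videos + total_photos

print(total_videos, total_photos, total)  # 108734 65224 173958

# Share of all removals per reason, largest first.
for reason, (videos, photos) in sorted(
    removals.items(), key=lambda item: sum(item[1]), reverse=True
):
    print(f"{reason}: {(videos + photos) / total:.2%}")
```

The category sums match the report's stated totals of 108,734 videos and 65,224 photos, so the eight removal reasons account for every removal recorded in 2021.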

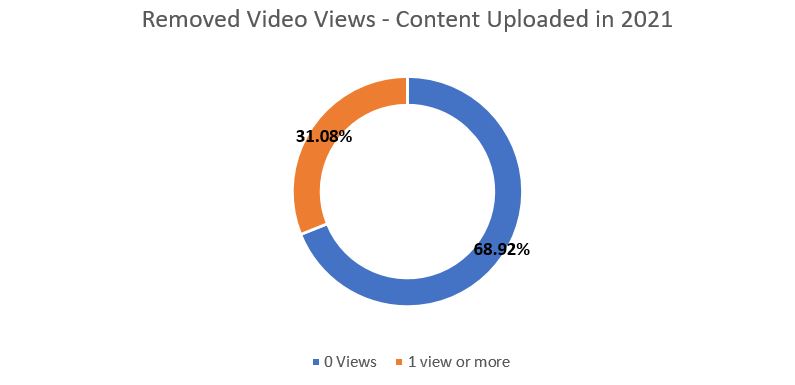

Removed Video Views

Given additional verification measures introduced in late 2020 and ongoing enhancements of moderation processes, we feel that it is important to evaluate the impact that these efforts have had in preventing and responding to abuse on our platform. An ongoing goal of our moderation efforts is to identify as much unwanted content as possible at the time of upload before appearing live on Punchporn.

The above figure shows the number of times videos uploaded in 2021 were viewed prior to their removal for Terms of Service Violations.

Analysis: 68.92% of removed videos were identified and removed by our human moderators or internal detection tools before going live on our platform.

Moderation and Content Removal Process

Upload and Moderation Process

As Punchporn strives to prevent abuse on our platform, all content is reviewed upon upload by our trained staff of moderators before it is ever made live on our site. Uploads are also automatically scanned against several external automated detection technologies as well as our internal tool for fingerprinting and identifying violative content, Safeguard. Furthermore, only verified users can upload content to our platform, after passing verification measures through Yoti.
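The upload flow described above can be read as a gate with three conditions: the uploader is verified, the content does not match a known fingerprint, and a moderator has approved it. The following is a minimal sketch of that reading only. The names `Upload`, `fingerprint`, and `can_publish` are hypothetical, and the SHA-256 digest is a stand-in that matches byte-identical files only; this report does not describe how the actual fingerprinting tools match content.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Upload:
    uploader_verified: bool   # uploader passed identity verification
    content: bytes            # raw bytes of the uploaded file
    moderator_approved: bool  # outcome of human review


def fingerprint(content: bytes) -> str:
    # Stand-in only: an exact hash, not the matching used by real tools.
    return hashlib.sha256(content).hexdigest()


def can_publish(upload: Upload, blocked_fingerprints: set) -> bool:
    """Return True only if every check described in the text passes."""
    if not upload.uploader_verified:
        return False
    if fingerprint(upload.content) in blocked_fingerprints:
        return False
    return upload.moderator_approved


# Hypothetical usage: a previously removed file is blocked on re-upload.
blocked = {fingerprint(b"previously removed file")}
print(can_publish(Upload(True, b"new file", True), blocked))                 # True
print(can_publish(Upload(True, b"previously removed file", True), blocked))  # False
```

Ordering the checks this way means an upload that fails an automated check never depends on the human review result, which mirrors the statement that content is screened before it is made live.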

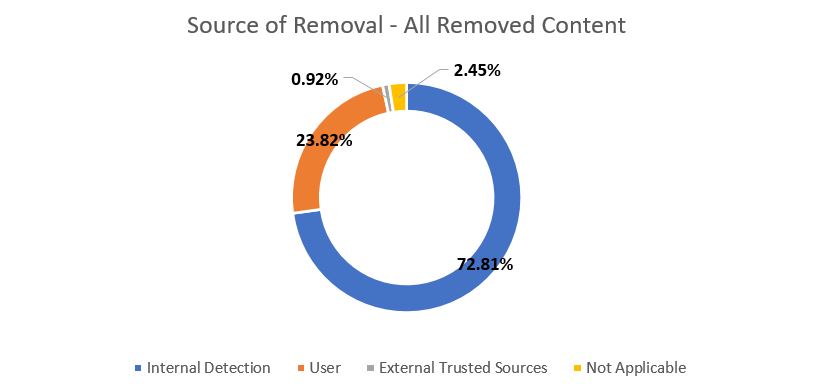

Content Removal Process

Users may alert us to content that may be illegal or otherwise violates our Terms of Service by using the flagging feature or by filling out our Content Removal Request form.

While users can notify us of potentially abusive content, we also invest a great deal into internal detection, which includes technology and a 24/7 team of moderators, who can detect content that may violate our Terms of Service at time of upload and before it is published. Most of this unwanted content is discovered via our internal detection methods.

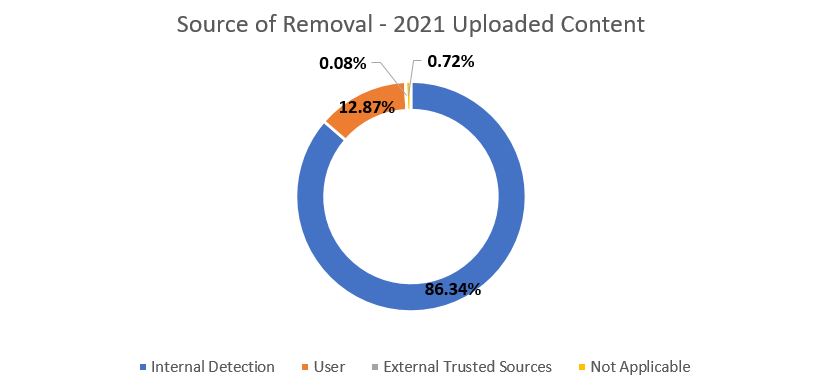

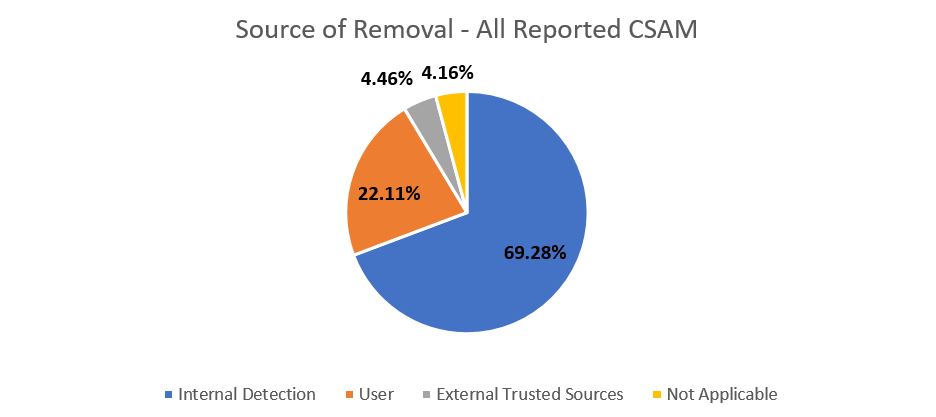

The above figure shows a breakdown of removals based on the source of detection. In addition to internal and user-sourced removals, removals due to External Trusted Sources include legal requests and notifications from our Trusted Flaggers. The content with a removal source of “Not Applicable” was removed prior to having detailed tracking of removal sources.

Analysis: Of all the content that was removed for a TOS violation in 2021, 72.81% was identified via Punchporn’s internal methods of moderation, compared to 23.82% that was brought to our team’s attention by our users.

As we add technologies and new moderation practices, our goal is to ensure that our moderation team identifies as much violative content as possible before it is published. This is paramount to ensuring our platform is free of abuse and to creating a safe environment for our users. For content uploaded in 2021, 86.34% of removals were the result of Punchporn’s internal detection methods.

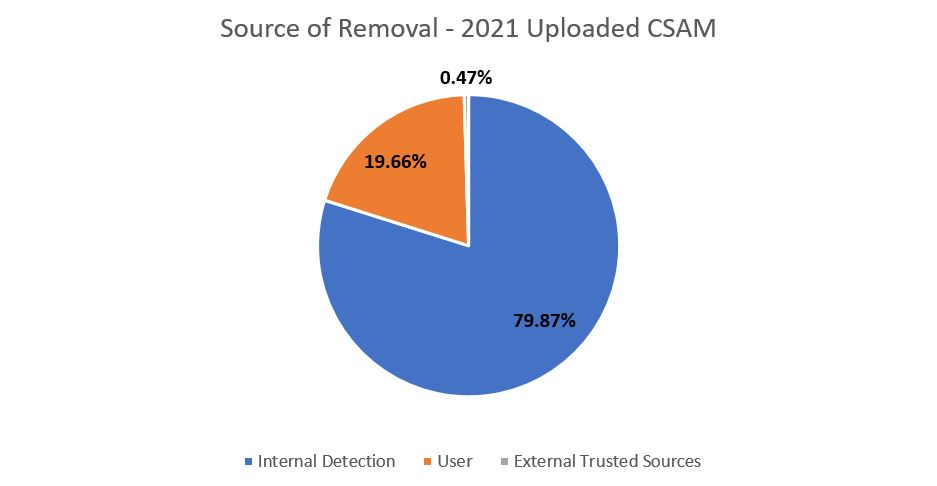

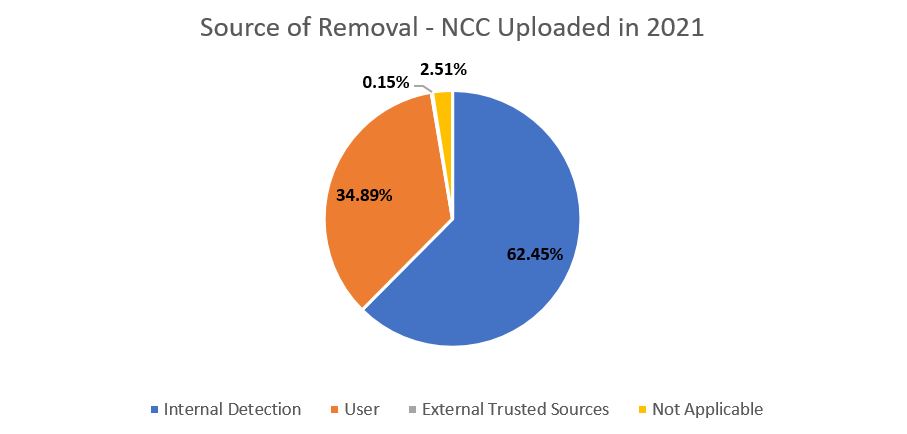

The above figure shows a breakdown of removals that were uploaded in 2021 based on the source of detection. See above for further information about the sources of removal.

Analysis: Of all the content that was uploaded and removed for a TOS violation in 2021, 86.34% was identified via Punchporn’s internal methods of moderation, compared to 12.87% that was brought to our team’s attention by our users.
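The two analyses above each quote an internal share and a user share, which leaves a small remainder for the other sources shown in the figures. A minimal sketch of that arithmetic follows, using only the percentages quoted in this report; the function name `remaining_share` is illustrative.

```python
def remaining_share(internal_pct: float, user_pct: float) -> float:
    """Percentage of removals not attributed to internal detection or users."""
    return round(100 - internal_pct - user_pct, 2)


# All content removed in 2021, regardless of upload year.
all_removals_other = remaining_share(72.81, 23.82)

# Content both uploaded and removed in 2021.
uploads_2021_other = remaining_share(86.34, 12.87)

print(all_removals_other)  # 3.37
print(uploads_2021_other)  # 0.79
```

The remainder is noticeably smaller for content uploaded in 2021 than for all removals, in line with the larger internal detection share reported for new uploads.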

User-Initiated Removals

While we strive to remove offending content before it is ever available on our platform, users may also flag content or submit a content removal request to alert us to potentially harmful or inappropriate content. These features are available to prevent abuse on the platform, assist victims in quickly removing content, and to foster a safe environment for our adult community.

Users may alert us to content that may be illegal or otherwise violate our Terms of Service by using the flagging feature found below all videos and photos, or by filling out our Content Removal Request form. Flagging and CRRs are kept confidential, and we review all content that is brought to our attention by users through these means.

User-based removals accounted for 12.87% of all removals for content uploaded and removed in 2021. After internal detection, user-based removals account for the largest percentage of removals overall, and specifically when identifying non-consensual content.

User Flags

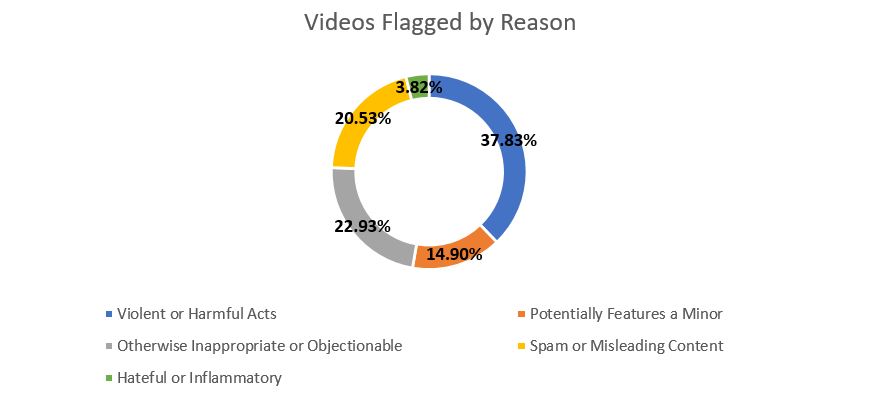

Users may flag content to bring it to the attention of our moderation team for the following reasons: Potentially Features a Minor, Violent or Harmful Acts, Hateful or Inflammatory, Spam or Misleading Content or Otherwise Objectionable or Inappropriate.

In 2021, content reported using the flagging feature was most often flagged for “Violent or Harmful Acts” and “Otherwise Inappropriate or Objectionable”, which together account for 60.76% of flags.

The figure above shows the breakdown of flagged videos received from users in 2021.

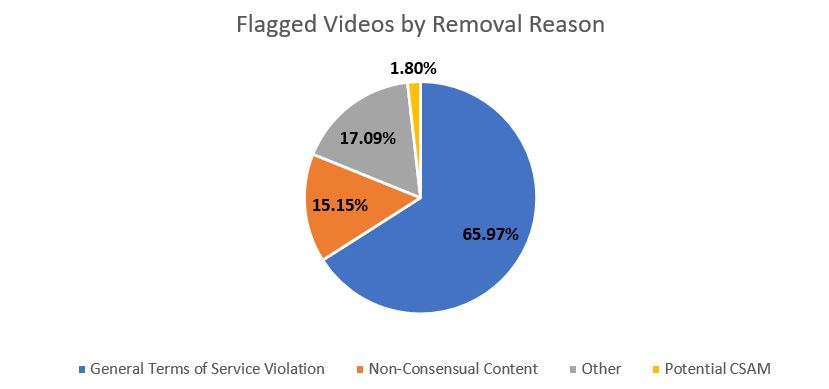

Upon receiving flags on videos and other content, our moderation team reviews the content and removes it if it violates our Terms of Service. Of the content removed after being flagged, most were removed due to general violations of our Terms of Service.

In addition to flagging content, users may also flag comments, users, and verified uploaders.

The figure above shows a breakdown of the reasons videos were removed after being flagged by a user. After review by our moderation team, most of the content removed was due to general Terms of Service violations.

Analysis: In the figure titled “Videos Flagged by Reason”, the second-largest category selected by users was “Otherwise Inappropriate or Objectionable”, which corresponds to general Terms of Service violations. General Terms of Service violations accounted for most flagged videos that were removed.

Content Removal Requests

Upon receipt of a Content Removal Request (CRR), the content is immediately suspended from public view pending investigation. This is an important step in assisting victims and ensuring that our platform stays free of abusive content. All requests are reviewed by our moderation team, who work to ensure that any content that violates our Terms of Service is quickly and permanently removed. In addition to being removed, whenever a non-consensual recording or distribution is identified, the content is digitally fingerprinted with both MediaWise and Safeguard to prevent re-upload. In accordance with our policies, uploaders of non-consensual content or CSAM will also be banned. All content identified as CSAM is reported to the National Center for Missing and Exploited Children (NCMEC).

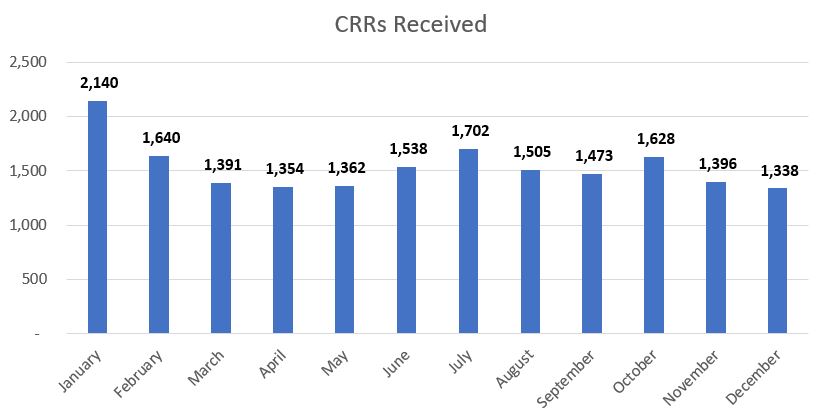

In 2021, Punchporn received a total of 18,467 Content Removal Requests.

The figure above shows the number of Content Removal Requests received by Punchporn per month in 2021.

Of the content removed due to Content Removal Requests, the majority was removed for a General Terms of Service Violation.

The figure above displays the breakdown of removal reasons for content that was removed because of Content Removal Requests.

Analysis: 47.03% of the content removed following a CRR was removed as non-consensual content, second only to general Terms of Service violations.

Combatting Child Sexual Abuse Material

We have a firm zero-tolerance policy against underage content, as well as the exploitation of minors in any capacity.

Punchporn is against the creation and dissemination of child sexual abuse material (CSAM), as well as the abuse and trafficking of children. CSAM is one of many examples of illegal content that is prohibited by our Terms of Service and related guidelines.

Punchporn is proud to be one of the 1,400+ companies registered with the National Center for Missing and Exploited Children (NCMEC) ESP Program. We have made it a point not only to ensure our policies and moderation practices are aligned with global expectations on child exploitation, but also to publicly announce our commitment to adhering to the Voluntary Principles to Counter Online Child Sexual Exploitation and Abuse.

Child sexual abuse material that is detected through moderation efforts or reported to us by our users and other external sources is reported to NCMEC. For the purposes of our CSAM Policy and our reporting to NCMEC, we deem a child to be a human, real or fictional, who is or appears to be below the age of 18. Any content that features a child engaged in sexually explicit conduct or in lascivious or lewd exhibition is deemed CSAM for the purposes of our Child Sexual Abuse Material Policy.

How We Work to Prevent CSAM

Punchporn deploys several different automated detection technologies to help moderate content before it can be published, including:

- CSAI Match – YouTube’s proprietary technology for combating child sexual abuse imagery. All video content appearing on Punchporn has been scanned against YouTube’s CSAI Match. All new video content is scanned at the time of upload and before it can be published.

- PhotoDNA – Microsoft’s technology that aids in finding and removing known images of child sexual abuse material. All photo content appearing on Punchporn has been scanned against Microsoft’s PhotoDNA. All new photo content is scanned at the time of upload and before it can be published.

- Google’s Content Safety API – Google’s Content Safety API uses artificial intelligence to help companies better prioritize abuse material for review. The API steps up the fight for child safety by prioritizing potentially illegal content for human review and helping reviewers find and report content faster.

- MediaWise – Vobile’s cyber “fingerprinting” software that scans all new user uploads to help prevent previously identified content from being re-uploaded.

- Safer – In November 2020, we became the first adult content platform to partner with Thorn, allowing us to begin using its Safer product on Punchporn and adding another layer of protection to our robust compliance and content moderation process. Safer joins the list of technologies that Punchporn utilizes to help protect visitors from unwanted or illegal material.

- Safeguard – Safeguard is Punchporn’s proprietary image recognition technology designed with the purpose of combatting both child sexual abuse imagery and non-consensual content, by preventing the re-uploading of previously fingerprinted content to our platform.

- Age Estimation – We also utilize age estimation capabilities to analyze content uploaded to our platform using a combination of internal proprietary software and Microsoft Azure Face API in an effort to strengthen the varying methods we use to prevent the upload and publication of potential or actual CSAM.

In addition to these tools, ALL content is reviewed at the time of upload and prior to publishing by our team of trained moderators, and we remove and report any content we deem to be potential CSAM.

Removed CSAM Content

In 2021, we made 9,029 reports to the National Center for Missing and Exploited Children (NCMEC). These reports contained 20,401 pieces of content (11,626 videos, 8,775 photos) that were identified as potential CSAM.

As with total content removals for violations of our Terms of Service in 2021, internal detection was the largest source of identification of potential CSAM.

The above figure shows a breakdown of reported CSAM based on the source of detection. In addition to internal and user-sourced removals, removals due to External Trusted Sources include legal requests and notifications from our Trusted Flaggers. The content with a removal source of “Not Applicable” was reported prior to having detailed tracking of removal sources.

Analysis: Of all the content that was reported as potential CSAM in 2021, including content uploaded prior to 2021, 69.28% was identified via Punchporn’s internal methods of moderation, compared to 22.11% that was brought to our team’s attention by our users.

For 2021 uploaded content that was reported as CSAM, 79.87% was identified through our internal detection methods.

The above figure shows a breakdown of reported CSAM based on the source of detection. In addition to internal and user-sourced removals, removals due to External Trusted Sources include legal requests and notifications from our Trusted Flaggers. No reported CSAM content uploaded in 2021 had a removal source of “Not Applicable”.

Analysis: Of the content that was both uploaded and reported as potential CSAM in 2021, 79.87% was identified via Punchporn’s internal methods of moderation, compared to 19.66% that was brought to our team’s attention by our users.

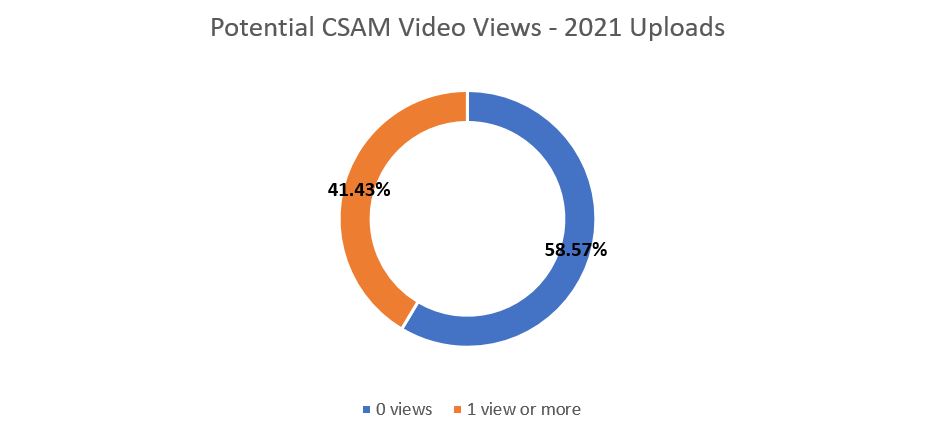

As part of our efforts to identify and quickly remove CSAM and other harmful material, we strive to ensure this content is removed before it is available to users and consumers. Of the videos uploaded and reported as CSAM in 2021, which represent 0.04% of videos uploaded in 2021, 58.57% were removed before being viewed.

The above figure shows the number of times videos uploaded in 2021 were viewed prior to being reported as potential CSAM.

Analysis: 58.57% of reported videos were identified and removed by our human moderators or internal detection tools before being viewed on our platform.

Non-Consensual Content

Punchporn is an adult content-hosting and sharing platform for consenting adult use only. We have a zero-tolerance policy against non-consensual content (NCC).

Non-consensual content not only encompasses what is commonly referred to as “revenge pornography” or image-based abuse, but also includes any content which involves non-consensual act(s), the recording of sexual material without consent of the person(s) featured, or any content which uses a person’s likeness without their consent.

We are steadfast in our commitment to protecting the safety of our users and the integrity of our platform; we stand with all victims of non-consensual content.

In 2021, we removed 28,781 pieces of content (20,666 videos, 8,115 photos) for violating our NCC policy.

Most of the content uploaded in 2021 and removed for a violation of our NCC policy was identified through internal detection, at 62.45%.

The above figure shows a breakdown of NCC removals that were uploaded in 2021 based on the source of detection. See above for further information about the sources of removal. The content with a removal source of “Not Applicable” was reported prior to having detailed tracking of removal sources.

Analysis: Of all the content that was uploaded and removed for violating our NCC policy in 2021, 62.45% was identified via Punchporn’s internal methods of moderation, compared to 34.89% that was brought to our team’s attention by our users.

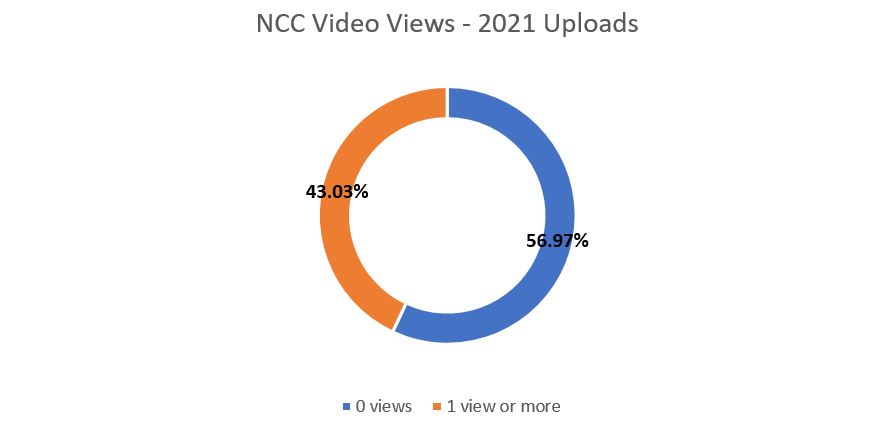

As part of our efforts to identify and quickly remove non-consensual content and other harmful material, we strive to ensure this content is removed before it is available to users and consumers. Of videos both uploaded and removed for violating our NCC policy in 2021, 56.97% were removed before being viewed.

The above figure shows the number of times videos uploaded in 2021 were viewed prior to being removed for violating our NCC policy.

Analysis: 56.97% of videos were identified and removed by our human moderators or internal detection tools before going live on our platform.

DMCA

The Digital Millennium Copyright Act, known as the DMCA, is a U.S. law that limits the liability of online service providers for copyright infringement by their users. To qualify, online service providers must do, and refrain from doing, certain things. The best-known aspects of the DMCA are its requirements that a service provider remove materials after it receives a notice of infringement and that it have (and implement) a policy to deter repeat infringement. Punchporn promptly responds to requests relating to copyright notifications.

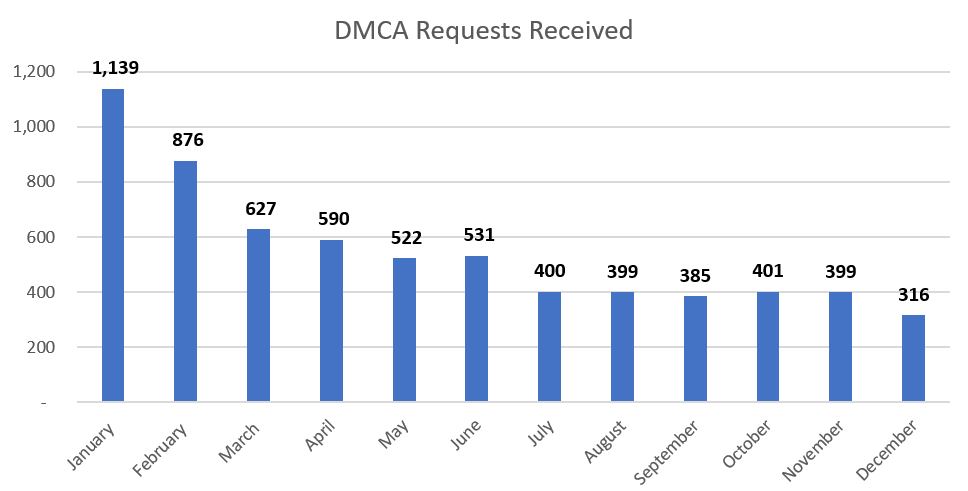

In addition to blocking uploads of works previously fingerprinted with MediaWise or Safeguard from appearing on Punchporn, in 2021 we responded to 6,585 requests for removal, leading to a total of 8,547 pieces of content removed.

The above figure shows the number of requests for removal related to DMCA per month in 2021.

Cooperation with Law Enforcement

We cooperate with law enforcement globally and readily provide all information available to us upon request and receipt of appropriate documentation and authorization. Legal requests can be submitted by governments and law enforcement, as well as private parties.

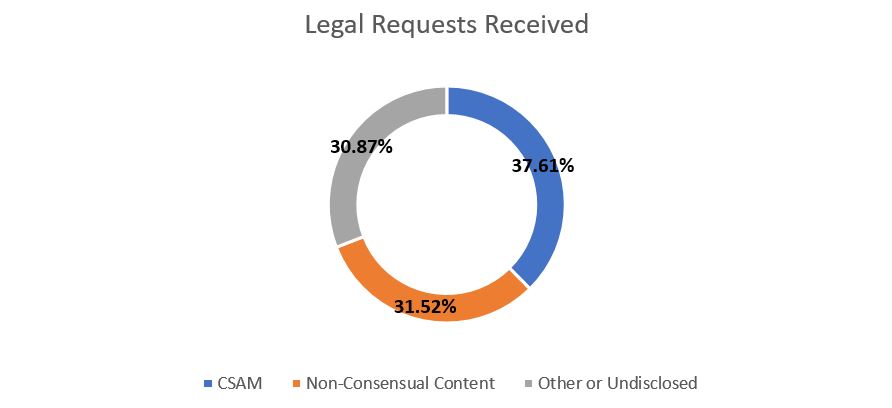

In 2021, we responded to 460 legal requests. A breakdown by request type as indicated by law enforcement is provided below.

The above figure displays the number of requests Punchporn received from law enforcement and legal entities in 2021 broken down by the type of request as indicated by the requester.

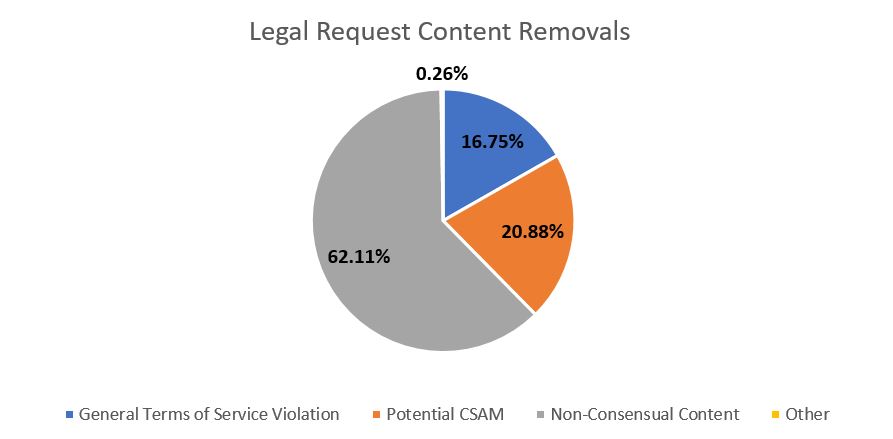

As a result of these requests, we classified a total of 388 pieces of content (192 videos, 196 photos) as Terms of Service violations. A breakdown by removal type is provided below.

The above figure displays photos and videos that were identified in law enforcement requests that we deemed to violate our Terms of Service upon review.