For Punchporn, nothing is more important than the safety of our community. Upholding our core values of consent, freedom of sexual expression, authenticity, originality, and diversity is only possible through the continual efforts to ensure the safety of our users and our platform. Consequently, we have always been committed to preventing and eliminating illegal content, including non-consensual material and child sexual abuse material (CSAM). Every online platform has the moral responsibility to join this fight, and it requires collective action and constant vigilance.

Over the years, we have put in place robust measures to protect our platform from abuse. We are constantly improving our Trust and Safety policies to better identify, flag, remove, review, and report illegal or abusive material. We are committed to doing all that we can to ensure a safe online experience for our users, which involves a concerted effort to innovate and to always strive to do more.

As part of our efforts and dedication to Trust and Safety, we feel it is important to provide information about our Trust and Safety initiatives, technologies, moderation practices and how our platform responds to harmful content. This Transparency Report provides these insights and builds upon our previous reports. Further information can be found in other sections of our Trust and Safety Center, including resources about our community, keeping our platform safe, and our policies.

We are committed to being as transparent as possible and to being a leader in eradicating abusive content from the internet. We will continue to do our part to create a safe space for our users.

Trust and Safety Initiatives and Partnerships – 2024 First-Half Update

Our goal to achieve and set the highest level of Trust and Safety standards relies heavily on not only our internal policies and moderation team, but also on our array of external partnerships and technology. The organizations we partner with are part of the global effort to reduce illegal material online and help ensure our platform and adult users can feel safe.

We continued to further our Trust and Safety efforts and are proud to have added new partnerships and initiatives. Below is a non-exhaustive list of these, for the period from January 1, 2024 through June 30, 2024:

Co-Performer Verification Requirements

All members of our Model Program are required to maintain identity documentation and documentation of consent to record and distribute for every performer appearing in their content. Beginning in January 2024, all members of the Model Program are required to upload this identity and consent documentation for all new performers seen in their content prior to publication of the content.

The Internet Watch Foundation (IWF) Partnership

The IWF is a technology-led, child protection organization, making the internet a safer place for children and adults across the world. They are one of the largest child protection organizations globally.

In November 2022, we announced a partnership with the IWF overseen by an Expert Advisory Board including the British Board of Film Classification, SWGfL, Aylo, Marie Collins Foundation, the Home Office, PA Consulting, National Crime Agency (NCA) and academia represented by Middlesex University and Exeter University, to:

- Develop a model of good practice to guide the adult industry in combatting child sexual abuse imagery online;

- Evaluate the effectiveness of IWF services when deployed across Aylo’s brands;

- Combine technical and engineering expertise to scope and develop solutions which will assist with the detection, disruption and removal of child sexual abuse material online.

In May 2024, Aylo and the IWF published the world’s first standards of good practice for adult content sites – Aylo and IWF partnership ‘paves the way’ for adult sites to join war on child sexual abuse online

Lucy Faithfull Foundation (Stop it Now UK & Ireland) & the IWF

In September 2022, we launched a first-of-its-kind chatbot on Punchporn in the UK in collaboration with the IWF and Stop It Now – IWF, Stop It Now and Punchporn launch chatbot. The chatbot engages with users on Punchporn who attempt to search for sexual imagery of children.

The results were independently evaluated by the University of Tasmania and published in February 2024. They showed that the chatbot was successful in reducing the number of searches for child sexual abuse material.

Age Determination – Thorn, Teleperformance & ICMEC

In May 2024, Teleperformance published a paper on age determination that we co-authored with Thorn, Teleperformance and the International Centre for Missing and Exploited Children (ICMEC): Age Determination – A Guide for Online Platforms that Feature User-Generated Content (teleperformance.com).

In co-writing the paper, we provided insights from our in-house moderation team. The paper explores the intricate process of age determination within user-generated content on online platforms and sheds light on the pivotal role it plays in enforcing content policies, particularly those safeguarding minors.

Crime Stoppers International (CSI)

In January 2024 we announced our working relationship with Crime Stoppers International with a shared goal to enhance the cooperation and collaboration between the online adult industry, civil society and law enforcement in combatting online harms and exploitation.

For a full list of Trust and Safety partnerships, initiatives and external technology, please refer to our Trust and Safety Initiatives page.

Content Removals

A total of 1.4 million (869,196 videos and 536,211 photos) pieces of content were uploaded to Punchporn in 2024 from January through June. Of these uploads, 91,789 (34,074 videos and 57,715 photos) were blocked at upload or removed for violating our Terms of Service.

An additional 333,036 pieces of content (32,512 videos and 300,524 photos) uploaded prior to January 2024 were removed and labeled as Terms of Service violations, for a total of 424,825 pieces of content (66,586 videos and 358,239 photos) removed during the six-month period ended June 30, 2024, for violations of our Terms of Service.
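The totals in the two paragraphs above can be reconciled with a few lines of arithmetic. The sketch below uses only figures stated in this report; the variable names are illustrative, and the derived removal share is a calculation from those figures rather than a number published here.

```python
# Figures taken from this report (January through June 2024).
uploaded = {"videos": 869_196, "photos": 536_211}
removed_new = {"videos": 34_074, "photos": 57_715}    # uploaded in the first half of 2024
removed_old = {"videos": 32_512, "photos": 300_524}   # uploaded before January 2024

total_uploaded = sum(uploaded.values())
total_removed_new = sum(removed_new.values())
total_removed_old = sum(removed_old.values())
total_removed = total_removed_new + total_removed_old

# Derived value: share of first-half uploads blocked at upload or removed.
removed_share = total_removed_new / total_uploaded

print(total_uploaded, total_removed_new, total_removed_old, total_removed)
print(f"{removed_share:.2%}")
```

The sums match the totals quoted in the text, which is a quick way to confirm that the video and photo counts were transcribed consistently.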

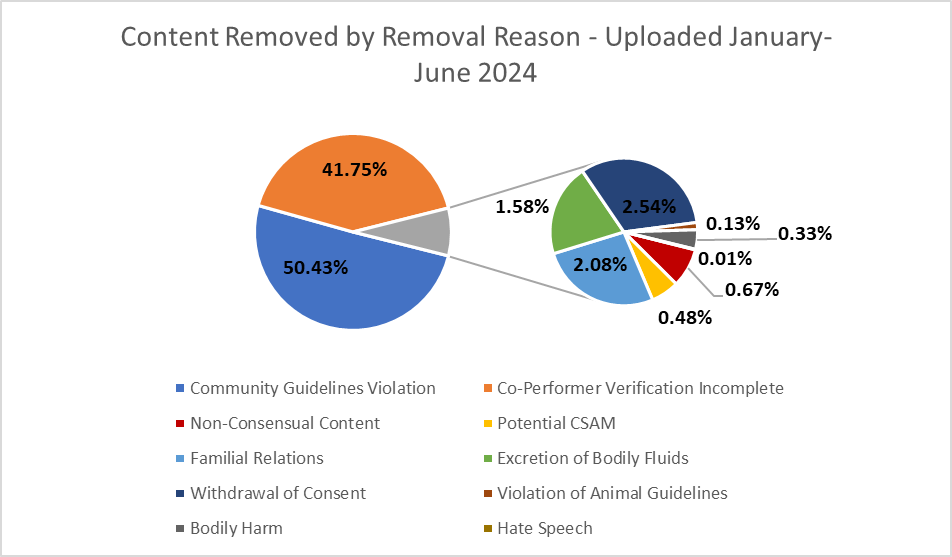

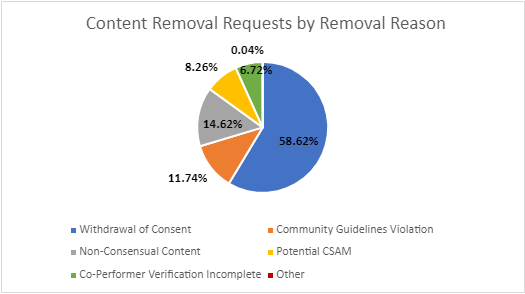

The above figure provides a breakdown, by removal reason, of content uploaded and removed in the first six months of 2024. For additional information and explanations of each removal reason, please see below.

Non-Consensual Content (NCC)

In the first half of 2024, we removed 8,549 pieces of content (7,014 videos and 1,535 photos) for violating our NCC policy. When we identify or are made aware of the existence of non-consensual content on Punchporn, we remove the content, fingerprint it to prevent re-upload, and ban the user in accordance with our Terms of Service.

Our NCC policy includes the following violations:

- Non-Consensual Content – Act(s): Content which depicts activity for which an individual did not validly consent. A non-consensual act is one involving the application of force directly or indirectly without the consent of the other person to a sexual act.

- Non-Consensual Content – Recording(s): The recording of sexually explicit activity without the consent of the featured person.

- Non-Consensual Content – Distribution: The distribution of sexually explicit content without the featured person’s permission.

- Non-Consensual Content – Manipulation: Also known as “deepfake,” this type of content appropriates a real person’s likeness without their consent. Violations of this policy include any content that is AI-generated, modified, or otherwise manipulates a person’s image, whether in picture or video, to deceive or mislead the viewer into believing that person is acting or speaking in the way presented.

Potential Child Sexual Abuse Material (CSAM)

We have a zero-tolerance policy toward any content that features a person (real or animated) who is under the age of 18. Through our use of cutting-edge CSAM detection technology, identity verification measures, and human moderators, we strive to quickly identify and remove potential CSAM and ban users who have uploaded this content. Any content that we identify as, or are made aware of being, potential CSAM is also fingerprinted upon removal to prevent re-upload. We report all instances of potential CSAM to the National Center for Missing and Exploited Children (NCMEC).

In the first six months of 2024, we made 1,450 reports to NCMEC. These reports contained 3,759 pieces of content (3,089 videos and 670 photos) which were reported for violating our CSAM Policy.

Community Guidelines Violations

In the first half of 2024, we removed 170,195 pieces of content (38,347 videos and 131,848 photos) for violating our Community Guidelines. When we identify or are made aware of the existence of content that violates our Community Guidelines on Punchporn, we remove the content, may fingerprint it to prevent re-upload, and, where appropriate, may ban the user.

A portion of these removals are related to requests from individuals who previously consented to the distribution of content they appear in but have submitted a request to retract that consent. Individuals who wish to retract their consent may reach out to us via our Content Removal Request form.

Content may be removed for Community Guidelines violations, outside of any of the other reasons listed, for being objectionable or inappropriate for our platform. This also includes violations related to brand safety, defamation, and the use of our platform for sexual services.

Other Reasons for Removal

Hate Speech

We do not allow abusive or hateful content or comments of a racist, homophobic, transphobic, or otherwise derogatory nature intended to hurt or shame those individuals featured.

From January through June, we removed 14 pieces of content (10 videos and 4 photos) for violating our hate speech and violent speech policy.

Animal Guidelines

Content that shows or promotes harmful activity to animals, including the exploitation of an animal for sexual gratification and the physical or psychological abuse of animals, is prohibited on our platform.

In the first six months of 2024, we removed 216 pieces of content (120 videos and 96 photos) for violating our Animal Guidelines.

Bodily Fluids

Content depicting vomit or feces, among other bodily fluids, is not permitted on Punchporn.

In the first half of 2024, we removed 1,857 pieces of content (1,646 videos and 211 photos) for violating our policy related to excretion of non-compliant bodily fluids.

Familial Relations

We do not allow any content which depicts incest.

During the six-month period ending June 30, 2024, we removed 2,275 pieces of content (2,267 videos and 8 photos) for violating our policy related to familial relations.

Bodily Harm

We prohibit excessive use of force that poses a risk of serious physical harm to any person appearing in the content. This violation does not include forced sexual acts, as this type of content would be addressed under our non-consensual content policy.

In the first six months of 2024, we removed 438 pieces of content (370 videos and 68 photos) for violating our bodily harm and violent content policy.

Co-Performer Verification Incomplete

We require uploaders to maintain valid documentation for any other individuals appearing in their content. We may, and from time to time do, for various reasons require uploaders to provide us with the complete set of documents that they are required to maintain (and that they represent to us they maintain) for the purposes of verifying the identification of a co-performer. Failure to do so, or the provision of incomplete or inconclusive verification documents, may lead to the removal of content and the banning of the uploader.

In the first half of 2024, we removed 237,522 pieces of content (13,723 videos and 223,799 photos) for having incomplete verification of the co-performers depicted.
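As a consistency check, the per-reason counts listed in the sections above can be summed and compared with the overall total of 424,825 removals. The sketch below uses only counts stated in this report; it assumes the potential CSAM figure counts toward total removals, which the arithmetic supports, and the percentage column is derived rather than published.

```python
# (videos, photos) removed per reason, as stated in the sections above.
removals = {
    "Non-Consensual Content": (7_014, 1_535),
    "Potential CSAM": (3_089, 670),
    "Community Guidelines": (38_347, 131_848),
    "Hate Speech": (10, 4),
    "Animal Guidelines": (120, 96),
    "Bodily Fluids": (1_646, 211),
    "Familial Relations": (2_267, 8),
    "Bodily Harm": (370, 68),
    "Co-Performer Verification Incomplete": (13_723, 223_799),
}

videos = sum(v for v, _ in removals.values())
photos = sum(p for _, p in removals.values())
total = videos + photos

# Largest categories first, with each category's share of all removals.
for reason, (v, p) in sorted(removals.items(), key=lambda kv: -sum(kv[1])):
    print(f"{reason:<40} {v + p:>8,} {(v + p) / total:6.2%}")

print(videos, photos, total)
```

The video, photo, and combined sums agree with the totals reported under Content Removals.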

Content Uploaded January Through June 2024

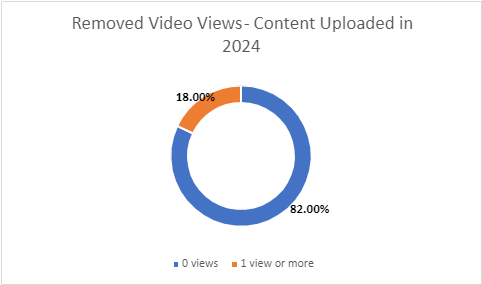

An ongoing goal of our moderation efforts is to identify as much non-compliant content as possible at the time of upload, before it appears live on Punchporn.

Videos uploaded in the first half of 2024 that were removed for violating our Terms of Service accounted for 0.76% of total views of videos uploaded during that period.

The above figure shows the number of times videos uploaded from January through June 2024 were viewed prior to their removal for Terms of Service violations.

Analysis: Eighty-two percent of removed videos were identified and removed by our human moderators or internal detection tools before being viewed on our platform.

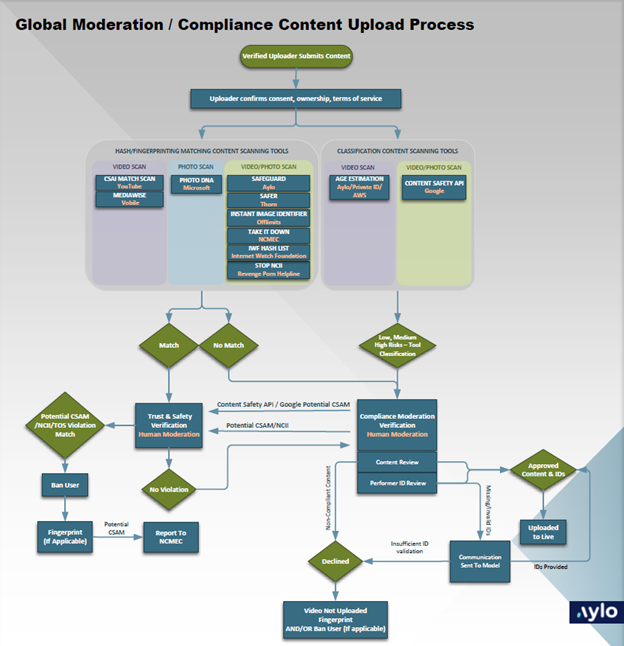

Moderation and Content Removal Process

Upload and Moderation Process

As Punchporn strives to prevent abusive material on our platform, only verified uploaders, including content partners and members of the Model Program, can upload content to our site. All members of the Model Program are required to verify their identity via a third-party identity documentation validation and identity verification service provider. Once an uploader is verified, all of their content is reviewed upon upload by our trained staff of moderators before it is ever made live on our site. Uploads are also automatically scanned against several external automated detection technologies as well as Safeguard, our internal tool for fingerprinting and identifying violative content.
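This report does not describe how Safeguard or the external tools compute fingerprints. As a rough illustration of the general idea of checking uploads against fingerprints of previously removed content, the sketch below uses an exact SHA-256 digest as a stand-in. Production systems typically rely on perceptual hashes that survive re-encoding, which this does not; the class and method names are hypothetical.

```python
import hashlib


class FingerprintBlocklist:
    """Hypothetical exact-match blocklist; not Safeguard's actual design."""

    def __init__(self) -> None:
        self._blocked: set[str] = set()

    @staticmethod
    def fingerprint(data: bytes) -> str:
        # SHA-256 only matches byte-identical files (stand-in for a perceptual hash).
        return hashlib.sha256(data).hexdigest()

    def block(self, data: bytes) -> None:
        """Record the fingerprint of content that was removed."""
        self._blocked.add(self.fingerprint(data))

    def is_blocked(self, data: bytes) -> bool:
        """Return True if an upload matches previously removed content."""
        return self.fingerprint(data) in self._blocked


blocklist = FingerprintBlocklist()
blocklist.block(b"bytes of a removed file")
print(blocklist.is_blocked(b"bytes of a removed file"))    # identical bytes match
print(blocklist.is_blocked(b"bytes of a different file"))  # unrelated bytes do not
```

An exact digest is the simplest possible fingerprint; it is shown here only because it keeps the lookup logic easy to follow.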

Content Removal Process

Users may alert us to content that may be illegal or otherwise violates our Terms of Service by using the flagging feature or by filling out our Content Removal Request form.

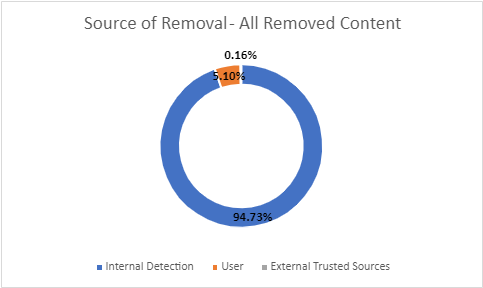

While users can notify us of potentially abusive content, we also invest a great deal into internal detection, which includes technology and a 24/7 team of moderators, who can detect content that may violate our Terms of Service at time of upload and before it is published. Most of this non-compliant content is discovered via our internal detection methods.

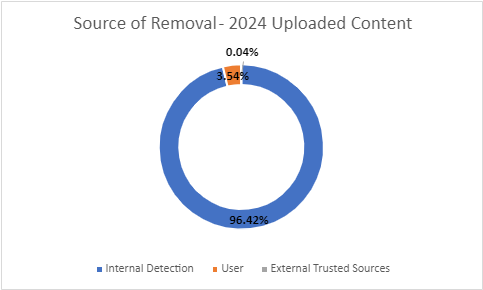

The above figure shows a breakdown of removals based on the source of detection. In addition to internal and user-sourced removals, removals due to External Trusted Sources include legal requests and notifications from our Trusted Flaggers.

Analysis: Of all the content that was removed for a TOS violation in the first six months of 2024, almost 95% was identified via Punchporn’s internal methods of moderation, compared to 5% that was brought to our team’s attention by our users.

As we add technologies and new moderation practices, our goal is to ensure that our moderation team is identifying as much violative content as possible before it is published. This is paramount to combatting abusive material and for creating a safe environment for our users. From content uploaded during the first six months of 2024, 96.42% of removals were the result of Punchporn’s internal detection methods.

The above figure shows a breakdown, based on the source of detection, of removals of content uploaded in the first half of 2024. See above for further information about the sources of removal.

Analysis: Of all the content that was uploaded and removed for a TOS violation during the first six months of 2024, 96.42% was identified via Punchporn’s internal methods of moderation, compared to 3.54% that was brought to our team’s attention by our users.
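The two percentages in the analysis above do not add up to 100%. A small calculation shows the remainder, which would presumably correspond to External Trusted Sources. This is a sketch under the assumption that the three sources named in this report are exhaustive; the published figures are rounded, so the remainder is approximate.

```python
from decimal import Decimal

internal = Decimal("96.42")  # internal detection, as reported
users = Decimal("3.54")      # user-sourced removals, as reported

# Assumption: internal detection, users, and External Trusted Sources are the
# only three detection sources, so the remainder is attributed to the third.
external = Decimal("100") - internal - users
print(external)
```

`Decimal` is used instead of floats so that the subtraction of two-decimal percentages stays exact.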

User-Initiated Removals

While we endeavor to remove offending content before it is ever available on our platform, our users play an important role in alerting us to content that may violate our Terms of Service. Users may bring this content to our attention by flagging content or submitting a content removal request. These features are available to prevent abuse on the platform, assist victims in quickly removing content, and to foster a safe environment for our adult community.

Users may alert us to content that may be illegal or otherwise violate our Terms of Service by using the flagging feature found below all videos and photos, or by filling out our Content Removal Request form. Flagging and Content Removal Requests are kept confidential, and we review all content that is brought to our attention by users through these means.

User-based removals accounted for 3.54% of all removals for content uploaded and removed in the first six months of 2024. After internal detection, user-based removals account for the largest percentage of removals overall, and specifically when identifying non-consensual content.

User Flags

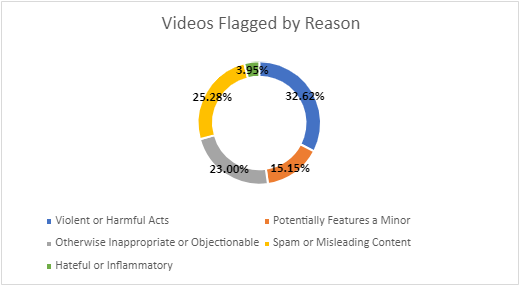

Users may flag content to bring it to the attention of our moderation team for the following reasons: Potentially Features a Minor, Violent or Harmful Acts, Hateful or Inflammatory, Spam or Misleading Content or Otherwise Objectionable or Inappropriate.

From January through June, content reported using the flagging feature was most often flagged for “Violent or Harmful Acts” and “Spam or Misleading Content”, which together account for nearly 58% of flags.

The figure above shows the breakdown of flagged videos received from users during the six-month period ending June 30, 2024.

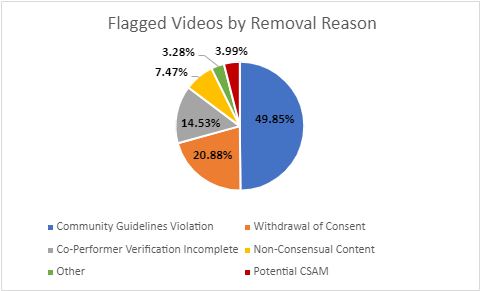

Upon receiving flags on videos and other content, our moderation team reviews the content and removes it if it violates our Terms of Service. Of the content removed after being flagged, most were removed due to Community Guidelines violations.

In addition to flagging content, users may also flag comments, users, and verified uploaders.

The figure above shows a breakdown of the reasons why videos were removed after being flagged by a user. After review by our moderation team, most of the content removed was due to Community Guidelines violations.

Analysis: In the figure titled “Videos Flagged by Reason”, the second largest category selected by users was “Spam or Misleading Content”, which corresponds to a Community Guidelines violation. Community Guidelines violations accounted for nearly 50% of flagged videos that were removed.

Content Removal Requests

Upon submitting a Content Removal Request with email confirmation, the content is immediately suspended from public view pending investigation. This is an important step in assisting victims and addressing abusive content. Content removal requests relating to CSAM may also be reported anonymously through a separate reporting form. All requests are reviewed by our moderation team, who work to ensure that any content that violates our Terms of Service is quickly and permanently removed. In addition to being removed, abusive content is digitally fingerprinted with both Mediawise and Safeguard, to prevent re-upload. In accordance with our policies, uploaders of non-consensual content or potential CSAM will also be banned. All content identified as potential CSAM is reported to the National Center for Missing and Exploited Children (NCMEC).
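The request-handling steps described above can be sketched as a small state model. This is an illustrative reading of the paragraph, not the platform's implementation: all names are hypothetical, and two behaviors are assumptions that the report does not state (an unconfirmed request leaves content unchanged until review, and content found not to violate the Terms of Service is restored).

```python
from enum import Enum, auto


class Status(Enum):
    LIVE = auto()
    SUSPENDED = auto()  # hidden from public view pending investigation
    REMOVED = auto()
    RESTORED = auto()   # assumption: outcome when no violation is found


def on_request(email_confirmed: bool) -> Status:
    """A request with email confirmation suspends the content immediately."""
    # Assumption: without confirmation, the content stays as-is until reviewed.
    return Status.SUSPENDED if email_confirmed else Status.LIVE


def on_review(violates_tos: bool, reason: str = "") -> tuple[Status, list[str]]:
    """Return the final status and follow-up actions after moderator review."""
    if not violates_tos:
        return Status.RESTORED, []
    actions = ["fingerprint"]  # fingerprinted to prevent re-upload
    if reason in {"ncc", "potential_csam"}:
        actions.append("ban_uploader")
    if reason == "potential_csam":
        actions.append("report_to_ncmec")
    return Status.REMOVED, actions


print(on_request(email_confirmed=True))
print(on_review(violates_tos=True, reason="potential_csam"))
```

Keeping the follow-up actions as a list makes it easy to see which steps apply to every removal and which apply only to non-consensual content or potential CSAM.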

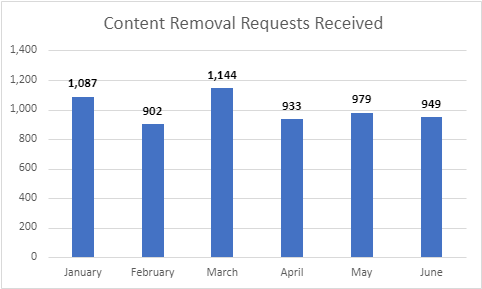

During the first six months of 2024, Punchporn received a total of 5,994 Content Removal Requests.

The figure above shows the number of Content Removal Requests received by Punchporn per month in the first half of 2024.

Of the content removed due to Content Removal Requests, the majority was removed for Withdrawal of Consent.

The figure above displays the breakdown of removal reasons for content that was removed due to Content Removal Requests.

Analysis: More than half of the content that was removed due to a Content Removal Request was due to Withdrawal of Consent, compared to 14.62% for Non-Consensual Content.

Combatting Child Sexual Abuse Material

We have a zero-tolerance policy against underage content, as well as the exploitation of minors in any capacity.

Punchporn is against the creation and dissemination of child sexual abuse material (CSAM), as well as the abuse and trafficking of children. CSAM is one of many examples of illegal content that is prohibited by our Terms of Service and related guidelines.

Punchporn is proud to be one of the 1,400+ companies registered with the National Center for Missing and Exploited Children (NCMEC) Electronic Service Provider (ESP) Program. We have made it a point to not only ensure our policies and moderation practices are aligned with global expectations on child exploitation, but also to publicly announce our commitment and support to continue to adhere to the Voluntary Principles to Counter Online Child Sexual Exploitation and Abuse.

Potential child sexual abuse material that is detected through moderation efforts, including detection through various internal and external tools listed here, or reported to us by our users and other external sources, is reported to NCMEC. For the purposes of our CSAM Policy and our reporting to NCMEC, we deem a child to be a human, real or fictional, who is or appears to be below the age of 18. Any content that features a child engaged in sexually explicit conduct, or in lascivious or lewd exhibition, is deemed CSAM for the purposes of our Child Sexual Abuse Material Policy.

Reported Potential CSAM

In the first six months of 2024, we made 1,450 reports to the National Center for Missing and Exploited Children (NCMEC). These reports contained 3,759 pieces of content (3,089 videos and 670 photos) that were identified as potential CSAM.

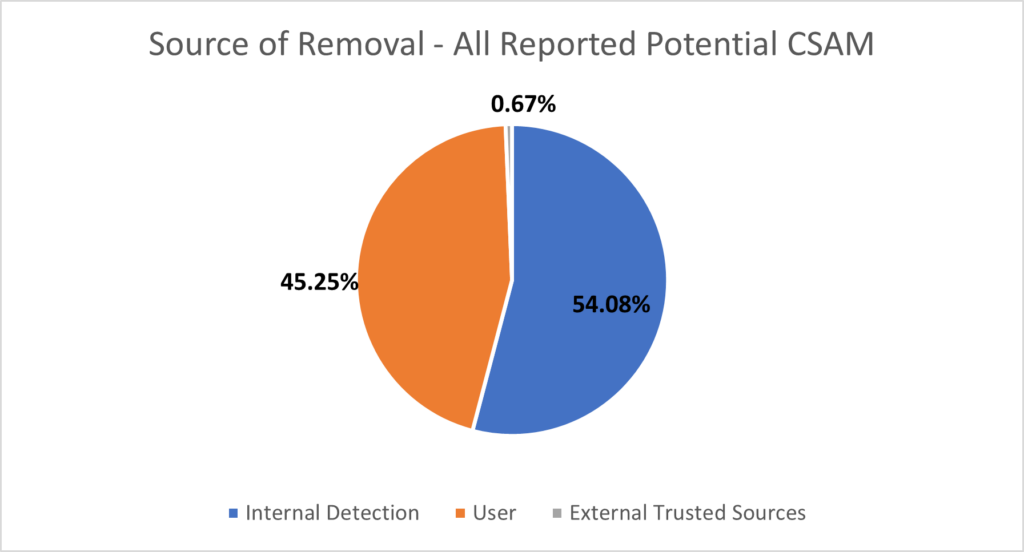

Internal detection was the greatest source of identifying potential CSAM that was removed in the first half of 2024.

The above figure shows a breakdown of reported potential CSAM based on the source of detection. In addition to internal and user-sourced removals, removals due to External Trusted Sources include legal requests and notifications from our Trusted Flaggers.

Analysis: Of all the content that was reported as potential CSAM during the six-month period ending June 30, 2024, including content uploaded prior to 2024, over 54% was identified via Punchporn’s internal methods of moderation, compared to 45.25% that was brought to our team’s attention by our users.

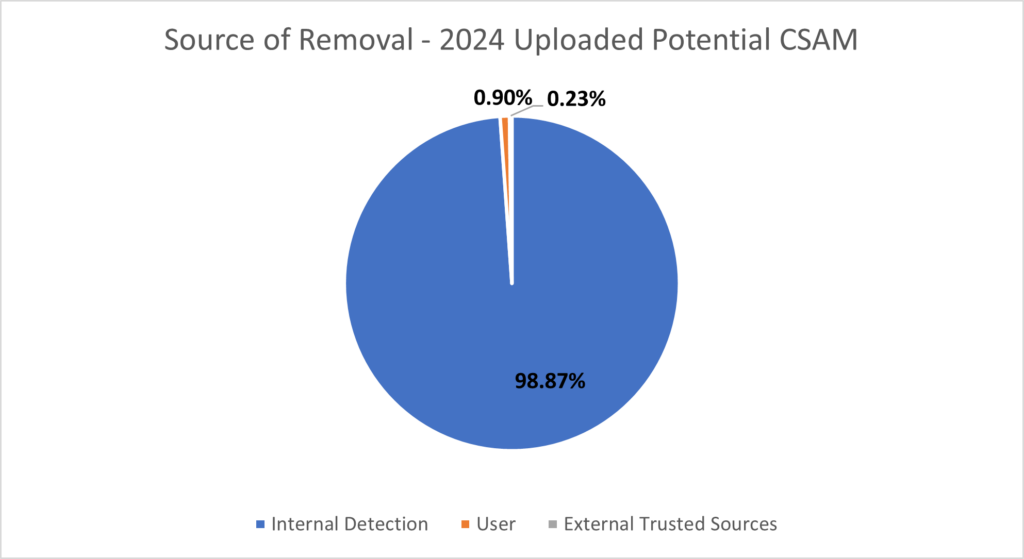

For content uploaded from January through June that was reported as potential CSAM, 98.87% was identified through our internal detection methods.

The above figure shows a breakdown of reported potential CSAM based on the source of detection. In addition to internal and user-sourced removals, removals due to External Trusted Sources include legal requests and notifications from our Trusted Flaggers.

Analysis: Of the content that was both uploaded and reported as potential CSAM in the first half of 2024, nearly all was identified via Punchporn’s internal methods of moderation, with the remainder brought to our team’s attention by our users and external trusted sources.

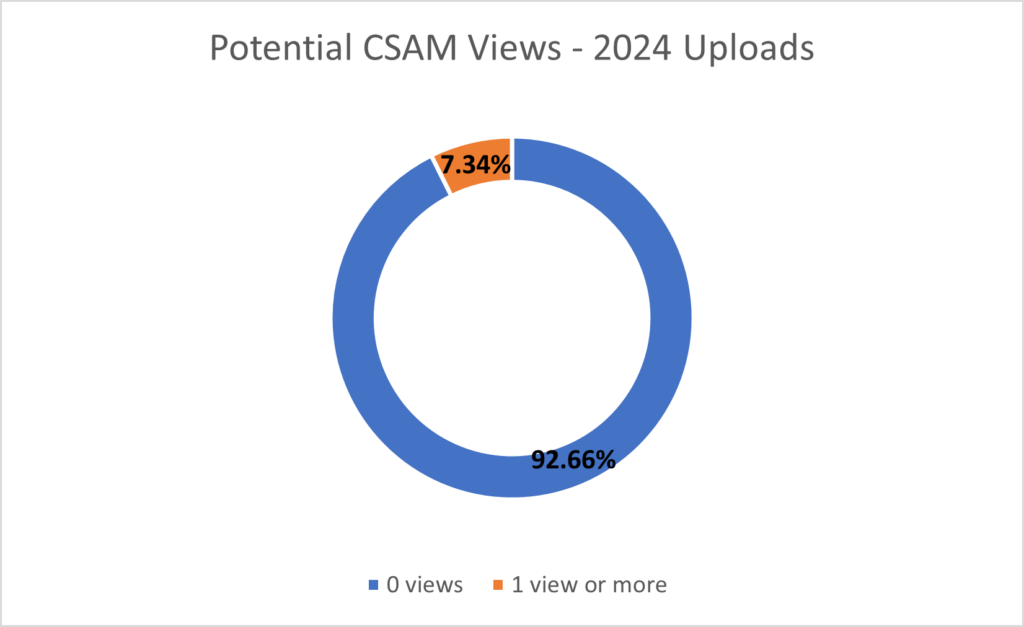

As part of our efforts to identify and quickly remove potential CSAM and other harmful material, we strive to ensure this content is removed before it is available to users and consumers. Of the videos uploaded and reported as potential CSAM in the first half of 2024, which represents 0.04% of videos uploaded during that period, 92.66% were removed before being viewed.

Videos uploaded in the first six months of 2024 and removed for violating our CSAM policy accounted for 0.002% of total views of all videos uploaded in that period.

The above figure shows the number of times videos uploaded in the first six months of 2024 were viewed prior to being reported as potential CSAM.

Analysis: Over 90% of reported videos were identified and removed by our human moderators or internal detection tools before being viewed on our platform.

Non-Consensual Content

Punchporn is an adult content-hosting and sharing platform for consenting adult use only. We have a zero-tolerance policy against non-consensual content (NCC).

Non-consensual content not only encompasses what is commonly referred to as “revenge pornography” or image-based abuse, but also includes any content which involves non-consensual act(s), the recording of sexual material without consent of the person(s) featured, or any content which uses a person’s likeness without their consent.

We are steadfast in our commitment to protecting the safety of our users and the integrity of our platform; we stand with all victims of non-consensual content.

From January through June, we removed 8,549 pieces of content (7,014 videos and 1,535 photos) for violating our NCC policy.

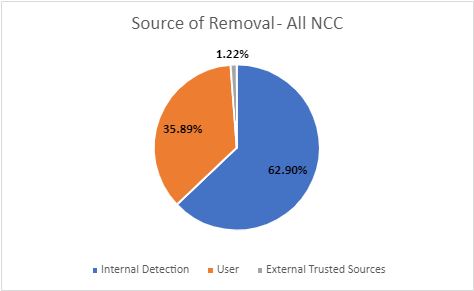

For all content removed for violating our NCC policy in the first six months of 2024, the majority of this content was identified through internal detection, at 62.90%.

The above figure shows a breakdown of NCC removals based on the source of detection. In addition to internal and user-sourced removals, removals due to External Trusted Sources include legal requests and notifications from our Trusted Flaggers.

Analysis: Of all the content that was removed for violating our NCC policy in the first six months of 2024, including content uploaded prior to January 2024, nearly 63% was identified via Punchporn’s internal methods of moderation, compared to nearly 36% that was brought to our team’s attention by our users.

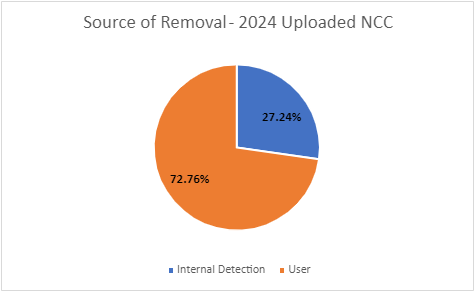

Most of the content uploaded in the first half of 2024 and removed for a violation of our NCC policy was identified through users, at 72.76%.

The above figure shows a breakdown, based on the source of detection, of NCC removals of content uploaded during the six-month period ending June 30, 2024. See above for further information about the sources of removal.

Analysis: Of all the content that was uploaded and removed for violating our NCC policy during the first six months of 2024, 27.24% was identified via Punchporn’s internal methods of moderation, compared to 72.76% that was brought to our team’s attention by our users. None of this content was removed due to notifications from External Trusted Sources.

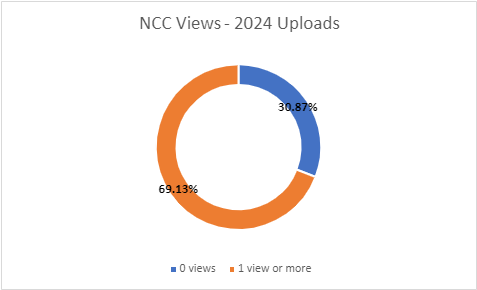

As part of our efforts to identify and quickly remove non-consensual content and other harmful material, we try to ensure this content is removed before it is available to users and consumers. Of videos both uploaded and removed for violating our NCC policy in 2024 from January through June, which represents 0.06% of videos uploaded during this time, nearly 31% were removed before being viewed.

Videos uploaded in the first six months of 2024 and removed for violating our NCC policy accounted for 0.005% of total views of all videos uploaded in the first half of 2024.

The above figure shows the number of times videos uploaded in the first six months of 2024 were viewed prior to being removed for violating our NCC policy.

Analysis: Nearly 31% of videos were identified and removed by our human moderators or internal detection tools before going live on our platform.

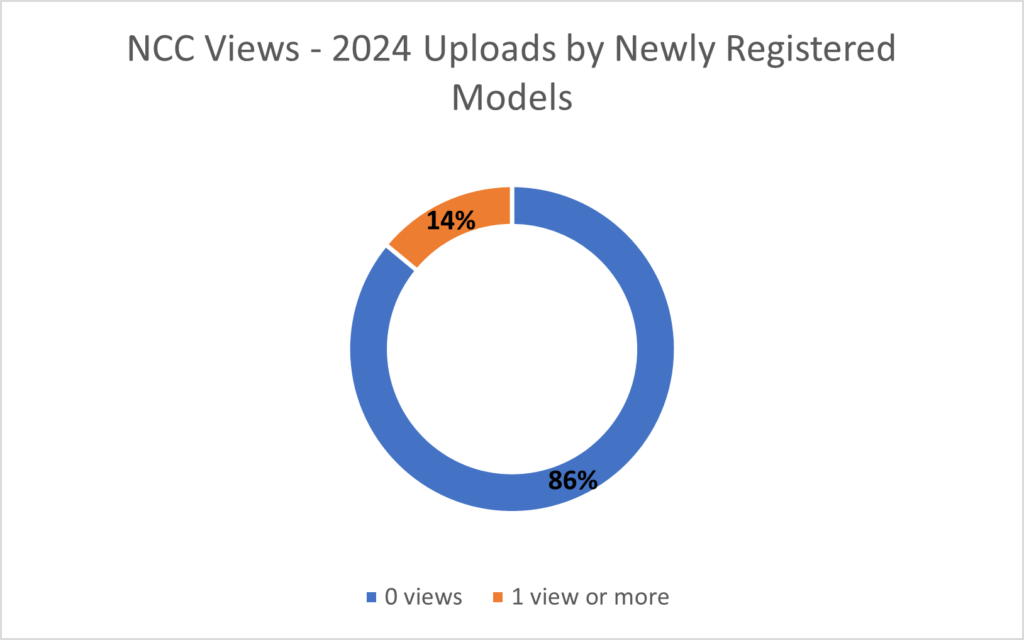

We have continued to strengthen our policies surrounding uploader requirements for providing identity verification and consent documentation for all performers in content. As of November 2023, all newly registered members of the Model Program must submit this identity and consent documentation for every performer before their content can be published on Punchporn.

Of the content uploaded and removed from January through June 2024 due to violating our NCC policy, the portion of these videos uploaded by members of the Model Program who have joined since November 2023 shows a higher rate of removal prior to going live, as seen below.

The above figure shows a breakdown of NCC removals that were uploaded during the six-month period ending June 30, 2024, by models registered on or after November 23, 2023.

Analysis: Of the videos related to newly registered models, 86% were removed before going live on our platform, compared to a removal rate of 31% for all videos uploaded and removed during this time for violating our NCC policy.

Banned Accounts

As part of our efforts to ensure user and platform safety, we ban accounts that have committed serious or repeated violations of our Terms of Service. Additionally, accounts that have violated our CSAM Policy are reported to the National Center for Missing and Exploited Children (NCMEC).

In the first six months of 2024, we banned a total of 30,085 user and model accounts for violating our Terms of Service. This includes accounts that were banned for uploading content that was removed, as well as accounts that were banned for violations not related to content, such as spamming. Additionally, an account may be banned at the time of verification if we detect that the account belongs to an individual whose previous account was banned for violating our Terms of Service.

The above figure shows the breakdown by ban reason for accounts that were banned in 2024 from January through June.

Analysis: Nearly 61% of accounts were banned for a Community Guidelines violation, while 7% of accounts were banned because they were identified as belonging to an individual whose previous account(s) had been banned.

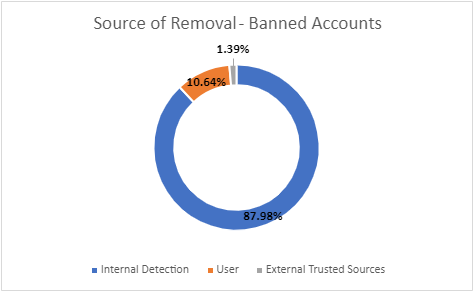

The majority of accounts banned during this period were identified by our internal detection methods.

The above figure shows a breakdown of banned accounts based on the source of detection. In addition to internal and user-sourced removals, removals due to External Trusted Sources include legal requests and notifications from our Trusted Flaggers.

Analysis: Of the accounts that were banned in the first six months of 2024, nearly 88% were identified due to internal detection methods, while user notifications accounted for 10.64% of banned accounts.

DMCA

The Digital Millennium Copyright Act, known as the DMCA, is a U.S. law that limits the liability of online service providers for copyright infringement by their users. To qualify, online service providers must do, and refrain from doing, certain things. The most well-known aspects of the DMCA are its requirements that a service provider remove materials after it receives a notice of infringement and have (and implement) a policy to deter repeat infringement. Punchporn promptly responds to requests relating to copyright notifications.

In addition to blocking uploads of works previously fingerprinted with Mediawise or Safeguard from appearing on Punchporn, during the six-month period ending June 30, 2024, we responded to 515 requests for removal, leading to a total of 2,019 pieces of content (1,535 videos and 484 photos) removed.

The above figure shows the number of requests for removal related to DMCA per month in the first half of 2024.

Cooperation with Law Enforcement

We cooperate with law enforcement globally and readily provide all information available to us upon request and receipt of appropriate documentation and authorization. Legal requests can be submitted by governments and law enforcement, as well as private parties.

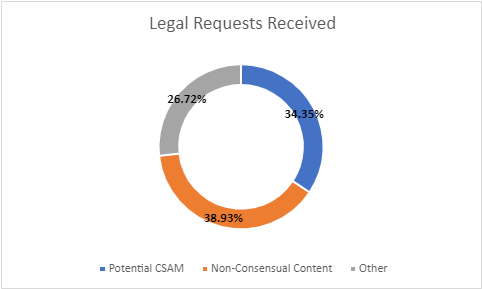

In the first half of 2024, we responded to 131 legal requests. A breakdown by request type as indicated by law enforcement is provided below.

The above figure displays the number of requests Punchporn received from law enforcement and legal entities in the first six months of 2024, broken down by the type of request as indicated by the requester.

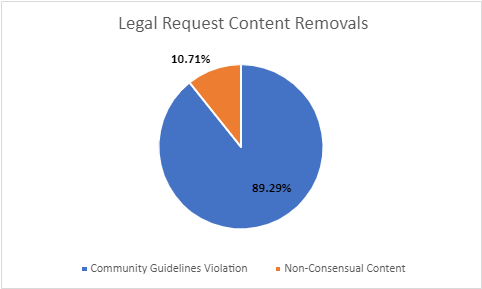

As a result of these requests, we classified a total of 28 pieces of content (all videos) as Terms of Service Violations due to legal requests. A breakdown by removal type is provided below.

The above figure displays content that was identified in law enforcement requests that we deemed to violate our Terms of Service upon review.

Content De-Indexing

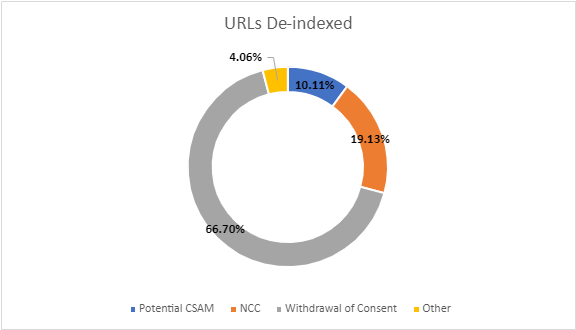

As part of our Trust and Safety efforts to remove abusive content not only from our platform, but globally, we request de-indexing of URLs that were removed for violating our Terms of Service from search engines. We proactively seek to de-index all content that is removed for potential CSAM and non-consensual content.

In the first half of 2024, we requested de-indexing of 27,383 URLs for 15,185 unique pieces of content.

The above figure displays a breakdown of the reasons for which URLs were de-indexed from search engines. There may be several URLs de-indexed per piece of content, as we request the de-indexing of every version of the URL (e.g. it.Punchporn.com/123 would be a URL de-indexed for the content located at www.Punchporn.com/123).
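To illustrate why one piece of content can map to several de-indexed URLs, the sketch below enumerates subdomain variants of a canonical URL and computes the average number of URLs per piece of content from the totals reported above. The function name and the list of subdomains are hypothetical; only "www" and "it" appear in this report, and the average is derived rather than published.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical list of localized subdomains; only "www" and "it" appear in the report.
SUBDOMAINS = ("www", "it", "de", "fr")


def url_variants(canonical_url: str, subdomains: tuple[str, ...] = SUBDOMAINS) -> list[str]:
    """Return the same path under each subdomain of the canonical host."""
    parts = urlsplit(canonical_url)
    # Drop the leftmost label ("www") to get the base domain.
    base_domain = parts.netloc.split(".", 1)[1]
    return [
        urlunsplit((parts.scheme, f"{sub}.{base_domain}", parts.path, parts.query, ""))
        for sub in subdomains
    ]


variants = url_variants("https://www.Punchporn.com/123")
print(variants)

# Average URLs requested per unique piece of content, from the reported totals.
urls_per_item = 27_383 / 15_185
print(round(urls_per_item, 2))
```

An average below two URLs per item suggests that many pieces of content had only one indexed variant, although the report does not break this down.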