Name of the service provider

Aylo Freesites Ltd

Date of the publication of the report

30 August 2024

Service

Punchporn

Reporting period

The following report covers the reporting period of 17 February 2024 to 30 June 2024.

Orders from authorities (Art. 15(1)(a) DSA)

The table below shows the number of orders from law enforcement for immediate removal, by country and type.

| Country | Non-Consensual Content | Total Orders |
|---|---|---|
| Austria | – | – |
| Belgium | – | – |
| Bulgaria | – | – |
| Croatia | – | – |
| Cyprus | – | – |
| Czech Republic (Czechia) | – | – |
| Denmark | – | – |
| Estonia | – | – |
| Finland | – | – |
| France | – | – |
| Germany | – | – |
| Greece | 1 | 1 |
| Hungary | – | – |
| Ireland | – | – |
| Italy | – | – |
| Latvia | – | – |
| Lithuania | – | – |
| Luxembourg | – | – |
| Malta | – | – |
| Netherlands | – | – |
| Poland | – | – |
| Portugal | – | – |
| Romania | – | – |
| Slovakia | – | – |
| Slovenia | – | – |
| Spain | – | – |
| Sweden | – | – |
| Totals | 1 | 1 |

We provide an immediate automated response to acknowledge receipt.

The median time to process these requests, once full information was received from law enforcement, was 12 hours. This does not include the time needed for intake of, or follow-up on, these requests. We typically provide completed information to law enforcement within 5 business days of receipt, during which time the content and/or account in question is disabled, where appropriate.

The table below indicates the number of information requests from law enforcement relating to individuals/users per country and type.

| Country | Child Sexual Abuse Material | Non-Consensual Content | Scams and/or Fraud | Risk for Public Security | Total Number of Requests |
|---|---|---|---|---|---|
| Austria | – | – | – | – | – |
| Belgium | – | – | – | – | – |
| Bulgaria | – | – | – | – | – |
| Croatia | – | – | – | – | – |
| Cyprus | – | – | – | – | – |
| Czech Republic (Czechia) | – | – | – | – | – |
| Denmark | 5 | 1 | 3 | 1 | 10 |
| Estonia | – | – | – | – | – |
| Finland | – | – | – | – | – |
| France | – | 1 | – | – | 1 |
| Germany | – | – | – | – | – |
| Greece | – | 3 | 4 | – | 7 |
| Hungary | – | 1 | – | – | 1 |
| Ireland | – | – | 1 | – | 1 |
| Italy | – | – | – | – | – |
| Latvia | – | – | 2 | – | 2 |
| Lithuania | – | – | – | – | – |
| Luxembourg | – | – | – | – | – |
| Malta | – | – | – | – | – |
| Netherlands | – | – | – | – | – |
| Poland | – | – | – | – | – |
| Portugal | 1 | – | – | – | 1 |
| Romania | – | – | – | – | – |
| Slovakia | – | – | 2 | – | 2 |
| Slovenia | – | – | – | – | – |
| Spain | – | – | – | – | – |
| Sweden | 1 | – | – | – | 1 |
| Totals | 7 | 6 | 12 | 1 | 26 |

We provide an automated response to acknowledge receipt.

The median time to process these requests, once full information was received from law enforcement, was 12 hours. This does not include the time needed for intake of, or follow-up on, these requests. We typically provide completed information to law enforcement within 5 business days of receipt, during which time the content and/or account in question is disabled, where appropriate.

User notices (Art. 15(1)(b) DSA)

Note that the figures provided in this section are for the total number of notices received. A notice may list one or several pieces of content, and one piece of content could be flagged several times.

Content reported by users

The table below indicates the number of user notices submitted by users through all available notification channels on Punchporn, including content removal requests (CRRs) and content flags.

| Type of potential violation | Total |
|---|---|
| Potential Child Sexual Abuse Material | 6,097 |
| Non-Consensual Content | 1,351 |
| Illegal or Harmful Speech | 1,304 |
| Content in violation of the platform’s terms and conditions | 25,324 |
| Intellectual property infringements | 1,254 |
| Total | 35,330 |

DSA Trusted Flaggers

We did not receive any removal requests from DSA Trusted Flaggers during the reporting period.

Actions taken on user reports

The table below indicates the number of pieces of content removed on the basis of user notices.

| Reason for Removal | Total |
|---|---|
| Content in violation of the platform’s terms and conditions | 2,229 |
| Non-Consensual Behavior | 1,547 |
| Potential Child Sexual Abuse Material | 324 |
| Animal Welfare | 2 |
| Bodily Harm/Violence | 4 |
| Intellectual Property Infringements | 1,546 |
| Withdrawal of consent | 6,488 |
| Total | 12,140 |

Notices processed by automated means

All notices are processed by our human moderation team; we do not use automated means to process any notices. Note that content reported via our content removal request form is immediately suspended from public view, prior to human review, provided that the submitter has validated their email address. If, after diligent human review, the content is found to be neither illegal nor incompatible with our terms and conditions, it is reinstated.
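The suspend-then-review flow for content removal requests can be pictured as a small state machine. The sketch below is illustrative only: the `Visibility` states, the `email_validated` flag and the `handle_removal_request` / `apply_review` helpers are hypothetical names, not a description of Punchporn's actual systems. What happens to content reported without a validated email is not stated above, so the sketch simply assumes it stays visible until a moderator decides.

```python
from enum import Enum


class Visibility(Enum):
    PUBLIC = "public"
    SUSPENDED = "suspended"  # hidden from public view pending human review
    REMOVED = "removed"


def handle_removal_request(state: Visibility, email_validated: bool) -> Visibility:
    """Hypothetical: suspend public content as soon as a validated request arrives."""
    if state is Visibility.PUBLIC and email_validated:
        return Visibility.SUSPENDED
    # Assumption: without a validated email, content stays as-is until human review.
    return state


def apply_review(state: Visibility, violates: bool) -> Visibility:
    """Hypothetical: a human moderator's decision removes or reinstates the content."""
    if violates:
        return Visibility.REMOVED
    if state is Visibility.SUSPENDED:
        return Visibility.PUBLIC  # reinstated: no illegality or T&C violation confirmed
    return state
```

For example, a validated request moves public content to `SUSPENDED`, and a review that finds no violation returns it to `PUBLIC`.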

Median resolution time

| Reporting source | Median resolution time |
|---|---|
| Content removal request form | 0.12 days |
| Content flags | 0.25 days |
| Copyright infringement form | 2.86 days |
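These figures are medians expressed in days, so 0.12 days is roughly 2.9 hours and 0.25 days is 6 hours. The sketch below shows one way such a figure could be computed from per-case timestamps; the `(received, resolved)` pairs and the `median_resolution_days` helper are hypothetical, since the report does not describe how resolution times are recorded.

```python
from datetime import datetime
from statistics import median


def median_resolution_days(cases: list[tuple[datetime, datetime]]) -> float:
    """Median of (resolved - received) across cases, converted to days."""
    durations = [
        (resolved - received).total_seconds() / 86400  # 86,400 seconds per day
        for received, resolved in cases
    ]
    # statistics.median averages the two middle values when the count is even.
    return median(durations)
```

With three hypothetical cases resolved after 2 hours, 3 hours and 24 hours, the median is the 3-hour case, i.e. 0.125 days; a single slow case does not pull the median up the way it would a mean.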

Content moderation (Art. 15(1)(c) DSA) & Automated content moderation (Art. 15(1)(e) DSA)

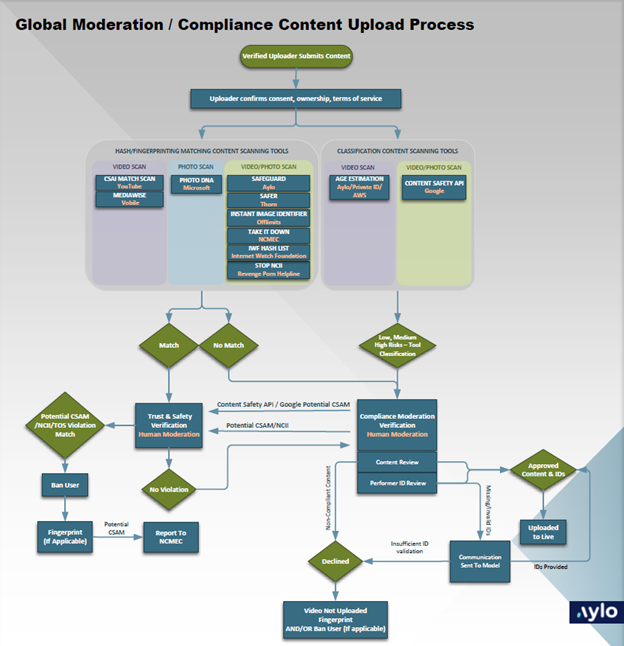

We use a combination of automated tools, artificial intelligence, and human review to help protect our community from illegal content. While all content available on the platform is reviewed by human moderators prior to publishing, we also have additional layers of moderation which audit material on our live platform for any potential violations of our Terms of Service.

The accuracy of our content moderation is largely unaffected by the Member State language involved, owing to our extensive use of automated tools and human moderation. Internal statistics show no significant differences in accuracy between languages, and the offences we moderate are largely language-independent.

Automated tools are used to inform the manual decisions of human moderators. The exception is known illegal material: when an applicable automated tool matches an uploaded piece of content to an entry in a hash list of previously identified illegal material, and that match is confirmed, the content is removed before it reaches a moderator.

Automated Tools

Punchporn’s content moderation process includes an extensive team of human moderators dedicated to reviewing every upload before it is published; a thorough system for flagging, reviewing, and removing illegal material; parental controls; and a variety of automated technologies for detecting known or previously identified illegal content, as well as potentially inappropriate content. Specifically:

Hash-list tools – known illegal material

We use a variety of tools that scan incoming images and videos against hash lists provided by NGOs. If there is a match, the content is blocked before publication.

- CSAI Match: YouTube’s proprietary technology for combating Child Sexual Abuse Imagery online.

- PhotoDNA: Microsoft’s technology that aids in finding and removing known images of child exploitation.

- Safer: In November 2020, we became the first adult content platform to partner with Thorn, allowing us to begin using its Safer product on Punchporn, adding an additional layer of protection in our robust compliance and content moderation process. Safer joins the list of technologies that Punchporn utilizes to help protect visitors from unwanted or illegal material.

- Instant Image Identifier: A tool from the Centre for Expertise on Online Sexual Child Abuse (Offlimits), commissioned by the European Commission, that detects known child abuse imagery using a triple-verified database.

- NCMEC Hash Sharing: NCMEC’s database of known CSAM hashes, including hashes submitted by individuals who fingerprinted their own underage content via NCMEC’s Take It Down service.

- StopNCII.org: A global initiative (developed by Meta & SWGfL) that prevents the spread of non-consensual intimate images (NCII) online. If any adult (18+) is concerned about their intimate images (or videos) being shared online without consent, they can create a digital fingerprint of their own material and prevent it from being shared across participating platforms.

- Internet Watch Foundation (IWF) Hash List: IWF’s database of known CSAM, sourced from hotline reports and the UK Home Office’s Child Abuse Image Database.
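At its core, the hash-list step is a set-membership test on a digest of each upload. The sketch below is a simplification under stated assumptions: it uses SHA-256 from Python's standard library to model the "exact binary match" defined in the manual vs automated removals section of this report, whereas the tools listed above (PhotoDNA, CSAI Match, Safer and others) use their own hashing schemes, which are not reproduced here. `KNOWN_HASHES` and `should_block` are hypothetical names.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Digest used as the lookup key (assumption: real hash lists use other schemes)."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical hash list; in practice such lists are supplied by NGOs and partners.
KNOWN_HASHES: set[str] = {sha256_hex(b"previously identified file")}


def should_block(upload: bytes, known_hashes: set[str] = KNOWN_HASHES) -> bool:
    """Block before publication if the upload's digest appears in the hash list."""
    return sha256_hex(upload) in known_hashes
```

A cryptographic digest like this only matches byte-identical files; a single re-encode or resize changes it completely. That is why dedicated tools rely on perceptual hashes, which tolerate such changes, and why a confirmed match rather than a raw digest comparison is what triggers removal.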

AI tools – unknown illegal material

We use several tools that apply AI to estimate the ages of performers. The output from these tools assists content moderators in deciding whether to allow publication of uploaded content. Specifically:

- Google Content Safety API: Google’s artificial intelligence tool that helps detect illegal imagery.

- Age Estimation: We also use age estimation to analyze content uploaded to our platform, combining internal proprietary software with external technology provided by AWS and PrivateID, to strengthen the various methods we use to prevent the upload and publication of potential or actual CSAM.

Fingerprinting tools

In addition to hashes received from NGOs, we use fingerprint databases to prevent previously prohibited material from being re-uploaded. Images and videos rejected during moderation, or removed after publication, are fingerprinted with the following tools to prevent re-publication. Content may also be fingerprinted proactively with these tools.

- Safeguard: Aylo’s proprietary image recognition technology, designed to combat both child sexual abuse imagery and non-consensual content by preventing previously fingerprinted content from being re-uploaded to our platform.

- MediaWise: Vobile’s fingerprinting software, which scans new uploads for potential matches against previously fingerprinted videos to prevent unauthorized material from being uploaded or re-uploaded to the platform.

Moderation / Compliance Content Upload Process

The chart below shows our moderation/compliance process from account creation to publication.

Accuracy & Safeguards

While automated tools assist in screening for and detecting illegal material, uploaded images and videos cannot be published until they have been reviewed and approved by our trained moderators. This human review acts as a quality-control mechanism and a safeguard for the automated systems.

Video removals from internal moderation

The table below provides the number of videos removed* on the basis of proactive voluntary measures (internal moderation, internal tools, internal audit), broken down by type of removal and total.

| Reason for Removal | Total |
|---|---|
| Content in violation of the platform’s terms and conditions | 29,267 |
| Non-Consensual Behavior | 350 |
| Potential Child Sexual Abuse Material | 2,804 |
| Animal Welfare | 93 |
| Bodily Harm/Violence | 271 |
| Illegal or Harmful Speech | 6 |
| Total | 32,791 |

* Removals in this section may include content that was removed in a previous period and reclassified to a different reason code during this reporting period as a result of internal auditing.

Image removals from internal moderation

The table below indicates the number of images removed through internal means (internal moderation, internal tools, internal audit), broken down by type of removal and total.

| Reason for Removal | Pieces of Content |
|---|---|
| Content in violation of the platform’s terms and conditions | 317,519 |
| Non-Consensual Behavior | 384 |
| Potential Child Sexual Abuse Material | 642 |
| Animal Welfare | 86 |
| Bodily Harm/Violence | 64 |
| Illegal or Harmful Speech | 3 |
| Total | 318,698 |

Manual vs automated removals from internal moderation

The table below indicates the pieces of content removed by internal means, broken down into automated removals (tools) and manual removals (internal moderation, internal audit). Automated decisions are those where one of our hashing tools achieves an exact binary match against known illegal material. Manual decisions are those where a human has made the decision, with or without the help of assisting tools.

| Type of Content | Total |
|---|---|
| Videos – Automated | 335 |
| Videos – Manual | 32,456 |
| Photos – Automated | 6 |
| Photos – Manual | 318,692 |
| Total | 351,489 |

User restrictions

The table below indicates the number of users banned, broken down by reason.

| Reason for Removal | Total |
|---|---|
| Age-specific restrictions concerning minors | 720 |
| Animal Welfare | 6 |
| Content in violation of the platform’s terms and conditions | 16,690 |
| Goods/services not permitted to be offered on the platform | 285 |
| Illegal or harmful speech | 53 |
| Impersonation or account hijacking | 1 |
| Inauthentic accounts | 2,421 |
| Non-consensual image sharing | 509 |
| Potential Child Sexual Abuse Material* | 2,812 |
| Violence | 1 |
| Total | 23,498 |

Complaints received against decisions (Art. 15(1)(d) DSA)

The table below shows the number of appeals from users against decisions to remove their content or to impose restrictions against their account. Appeals include requests for additional information about the corresponding removal or restriction.

| Appeals – Account Restrictions | Number of Appeals |
|---|---|
| Total Account Appeals | 2,631 |
| Decision Upheld | 2,613 |
| Account Reinstated | 18 |

The median time to resolve these complaints was just under 18 days.

| Appeals – Content Removals | Number of Appeals |
|---|---|
| Total Content Appeals | 2,755 |
| Decision Upheld | 1,871 |
| Content Reinstated | 884 |

The median time to resolve these complaints was just under 7 days.

Out-of-court dispute settlement (Art. 24(1)(a) DSA)

To our knowledge, no disputes have been submitted to out-of-court settlement bodies during the reporting period.

Suspensions for misuse (Art. 24(1)(b) DSA)

Number of accounts banned: 23,498

Number of accounts actioned for repeatedly submitting unfounded removal requests: 317

Human resources (Art. 42(2)(a) and (b) DSA)

Images and videos are not published on the platform until they have been reviewed by a human moderator, and our moderators are not subject to any content review quotas. They are directed to review content and to approve it only if they have determined that it does not violate our terms of service. Therefore, increasing the number of moderators would primarily affect the speed at which content is published on Punchporn, with little additional effect on the volume of illegal or incompatible content that is actually disseminated.

Qualifications and linguistic expertise of HR dedicated to content moderation

Moderators review content in a wide variety of languages and use several tools to assess it. Before content reaches moderators, all of its metadata is scanned against our Banned Word Service, which contains a library of over 40,000 banned terms across more than 40 languages (including 21 EU languages). Moderators then use translation tools to evaluate the metadata and ensure that the text is compliant. Audio content is assessed by moderators who either use translation/transcription tools or understand the spoken language. Where the audio cannot be understood, the content is rejected, because we are unable to meaningfully evaluate potential compliance issues. In all cases, moderation is a collaborative task: moderators are encouraged to solicit opinions from co-workers, senior team members, leads, and managers when reviewing content.
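A metadata scan against a banned-term library can be sketched as normalizing the text and checking its words against the list. The internals of the Banned Word Service are not described in this report, so the sketch below is an assumption throughout: `normalize` and `find_banned_terms` are hypothetical helpers, and the approach (case-folding, accent stripping, whole-word matching) is one simple choice among many.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Case-fold and strip accents so simple spelling variants compare equal."""
    decomposed = unicodedata.normalize("NFKD", text.casefold())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def find_banned_terms(metadata: str, banned_terms: set[str]) -> set[str]:
    """Return the (single-word) banned terms that appear as whole words in the metadata."""
    words = set(re.findall(r"\w+", normalize(metadata)))
    return {term for term in banned_terms if normalize(term) in words}
```

For example, `find_banned_terms("Title: Forbidden Wörd here", {"wörd"})` returns `{"wörd"}`, because both sides normalize to `word`. Multi-word phrases, deliberate misspellings and non-space-delimited scripts would need more than this word-level check, which is one reason a human reviews the metadata afterwards.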

Training and support given to content moderation HR

All moderators receive extensive training over a 3-month period, involving theoretical and practical exercises, job shadowing, and a final exam that requires a perfect score to pass. Once their command of the compliance guidelines has been confirmed, moderators are supervised on all of their reviews for a period of time. Any moderation errors are addressed and corrected to ensure consistent application of the guidelines.

We use two different virtual care platforms (one for North America and one for Europe) that give moderators access to a variety of health and wellness professionals. An additional program provides moderators with further, complementary support and tailored wellness programs involving fitness, nutrition, and life coaches, counsellors, and medical professionals.

Information on the average monthly recipients of the service for each Member State

Relevant period: 1 February 2024 – 31 July 2024

| Country | Monthly Average |
|---|---|
| Austria | 549,097 |
| Belgium | 1,132,161 |
| Bulgaria | 380,356 |
| Cyprus | 101,346 |
| Czechia | 356,668 |
| Germany | 4,992,442 |
| Denmark | 541,698 |
| Estonia | 106,006 |
| Spain | 3,385,833 |
| Finland | 294,077 |
| France | 5,432,986 |
| Greece | 663,651 |
| Croatia | 214,982 |
| Hungary | 502,393 |
| Ireland | 403,400 |
| Italy | 2,533,391 |
| Lithuania | 204,276 |
| Luxembourg | 23,563 |
| Latvia | 111,437 |
| Malta | 70,706 |
| Netherlands | 2,461,258 |
| Poland | 1,429,743 |
| Portugal | 1,199,342 |
| Romania | 617,934 |
| Sweden | 1,251,363 |
| Slovenia | 223,376 |
| Slovakia | 253,593 |
| EU Total | 28,137,511 |

Primarily because individual users who access the platform from multiple EU Member States in the same month are deduplicated in the EU figure, the sum of the Member State counts does not exactly equal the EU total. While we have employed reasonable and rigorous processes to publish the most accurate figures possible, some imperfections are likely.
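The effect of deduplication is visible in the table: the 27 Member State figures sum to 29,437,078, while the EU total is 28,137,511. The sketch below illustrates why, for a single month, under assumptions: each visit is a hypothetical `(user_id, country)` pair and `per_country_and_eu_totals` is a hypothetical helper. How recipients are actually identified and averaged over the six-month period is not described here.

```python
def per_country_and_eu_totals(visits: list[tuple[str, str]]) -> tuple[dict[str, int], int]:
    """Count distinct users per country, and distinct users across the whole EU."""
    by_country: dict[str, set[str]] = {}
    for user_id, country in visits:
        by_country.setdefault(country, set()).add(user_id)
    per_country = {country: len(users) for country, users in by_country.items()}
    # A user seen in several countries counts once here, but once per country above.
    eu_total = len({user_id for user_id, _ in visits})
    return per_country, eu_total
```

With visits `[("u1", "BE"), ("u1", "NL"), ("u2", "NL")]`, the per-country counts are `{"BE": 1, "NL": 2}`, which sum to 3, but the EU total is 2 because `u1` is counted only once.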